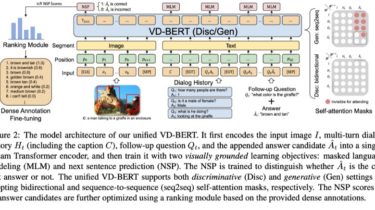

A Unified Vision and Dialog Transformer with BERT

VD-BERT: PyTorch code for the following paper at EMNLP 2020:

Title: VD-BERT: A Unified Vision and Dialog Transformer with BERT [pdf]

Authors: Yue Wang, Shafiq Joty, Michael R. Lyu, Irwin King, Caiming Xiong, Steven C.H. Hoi

Institute: Salesforce Research and CUHK

Abstract

Visual dialog is a challenging vision-language task, where a dialog agent needs to answer a series of questions through reasoning on the image content and dialog history. Prior work has mostly focused on various attention mechanisms to model such intricate interactions. By contrast, in […]
