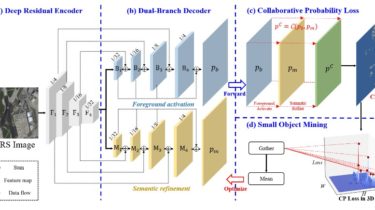

Foreground Activation Driven Small Object Semantic Segmentation in Large-Scale Remote Sensing Imagery

FactSeg

FactSeg: Foreground Activation Driven Small Object Semantic Segmentation in Large-Scale Remote Sensing Imagery (TGRS)

by Ailong Ma, Junjue Wang*, Yanfei Zhong* and Zhuo Zheng

This is the official implementation of FactSeg from our TGRS paper “FactSeg: Foreground Activation Driven Small Object Semantic Segmentation in Large-Scale Remote Sensing Imagery”.

Citation

If you use FactSeg in your research, please cite our forthcoming TGRS paper:

@ARTICLE{FactSeg, author={Ma Ailong, Wang Junjue, Zhong Yanfei and Zheng Zhuo}, journal={IEEE Transactions on Geoscience and Remote Sensing}, […]
