A PyTorch Implementation of Neural IMage Assessment

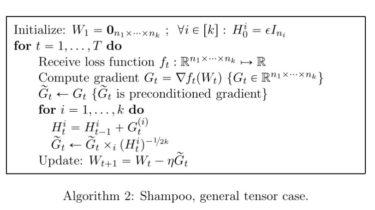

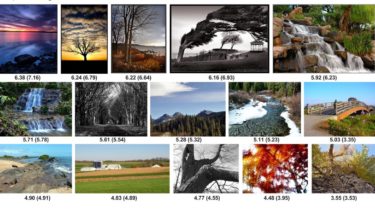

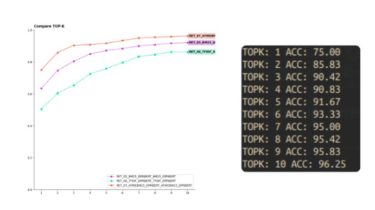

NIMA: Neural IMage Assessment

This is a PyTorch implementation of the paper NIMA: Neural IMage Assessment (accepted at IEEE Transactions on Image Processing) by Hossein Talebi and Peyman Milanfar. You can learn more from this post on the Google Research Blog.

Implementation Details

The model was trained on the AVA (Aesthetic Visual Analysis) dataset containing 255,500+ images. You can get it from here. Note: the dataset may contain some corrupted images; remove them before you start training. Use […]
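The note about corrupted images can be acted on with a quick pre-training scan. The sketch below is one possible check, not the repository's own method: it assumes the AVA images are `.jpg` files in a single directory, and it only tests whether each file starts with the JPEG start-of-image marker and ends with the end-of-image marker. The function names `looks_like_complete_jpeg` and `find_suspect_images` are hypothetical. This catches truncated or empty downloads but not every kind of corruption; fully decoding each image with an image library would be stricter.

```python
from pathlib import Path

JPEG_SOI = b"\xff\xd8"  # start-of-image marker at the beginning of a JPEG
JPEG_EOI = b"\xff\xd9"  # end-of-image marker at the end of a JPEG


def looks_like_complete_jpeg(path):
    """Return True if the file starts with SOI and ends with EOI.

    Heuristic only (an assumption of this sketch): a file can pass
    this check and still fail to decode.
    """
    data = Path(path).read_bytes()
    # Tolerate trailing padding bytes that some tools append after EOI.
    trimmed = data.rstrip(b"\x00\r\n ")
    return (
        len(trimmed) >= 4
        and trimmed.startswith(JPEG_SOI)
        and trimmed.endswith(JPEG_EOI)
    )


def find_suspect_images(image_dir):
    """Return a sorted list of .jpg paths in image_dir that fail the check."""
    return sorted(
        p for p in Path(image_dir).glob("*.jpg")
        if not looks_like_complete_jpeg(p)
    )
```

Calling `find_suspect_images` on the image directory returns the paths to inspect or delete before training; the sketch deliberately does not delete anything itself.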
