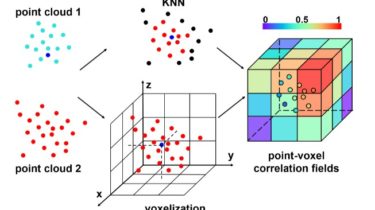

Point-Voxel Correlation Fields for Scene Flow Estimation of Point Clouds

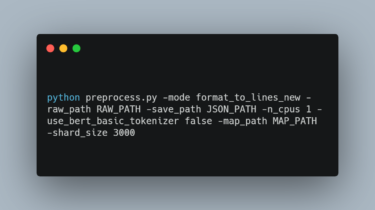

This repository contains the PyTorch implementation for the paper “PV-RAFT: Point-Voxel Correlation Fields for Scene Flow Estimation of Point Clouds” (CVPR 2021) [arXiv].

## Installation

### Prerequisites

- Python 3.8
- PyTorch 1.8
- torch-scatter
- CUDA 10.2
- RTX 2080 Ti
- tqdm, tensorboard, scipy, imageio, png

The environment can be created with the following commands:

    conda create -n pvraft python=3.8
    conda activate pvraft
    conda install pytorch torchvision torchaudio cudatoolkit=10.2 -c pytorch
    conda install tqdm tensorboard scipy imageio
    pip install pypng
    pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-1.8.0+cu102.html

## Usage

### Data Preparation

We follow HPLFlowNet to prepare the FlyingThings3D and KITTI datasets. […]
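Before preparing data, it can help to confirm that the packages from the installation step resolved correctly. The following is a minimal sketch, not part of the repository: the function name `check_environment` and the module-to-package mapping `REQUIRED` are illustrative assumptions derived from the prerequisites list. It uses only the Python standard library, so it runs even when some packages are missing.

```python
import importlib.util
from importlib import metadata

# Import name -> installed package name.
# Assumed mapping based on the prerequisites list; not taken from the repository.
REQUIRED = {
    "torch": "torch",
    "torch_scatter": "torch-scatter",
    "tqdm": "tqdm",
    "tensorboard": "tensorboard",
    "scipy": "scipy",
    "imageio": "imageio",
    "png": "pypng",  # pypng is imported as `png`
}


def check_environment(required=REQUIRED):
    """Return {import_name: version, "unknown", or None if not importable}."""
    report = {}
    for module_name, dist_name in required.items():
        # find_spec locates the module without importing it.
        if importlib.util.find_spec(module_name) is None:
            report[module_name] = None
            continue
        try:
            report[module_name] = metadata.version(dist_name)
        except metadata.PackageNotFoundError:
            # Importable, but no package metadata under the assumed name.
            report[module_name] = "unknown"
    return report


if __name__ == "__main__":
    for name, version in check_environment().items():
        print(f"{name:15s} {version if version else 'MISSING'}")
```

If `torch` is reported as present, calling `torch.cuda.is_available()` in the same environment additionally confirms that the CUDA build can see the GPU.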
