A Python application to automate the process of uploading files to Amazon S3

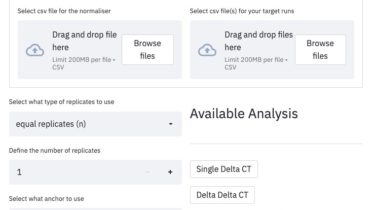

intelligent-s3-upload

Upload files or folders (including subfolders) to Amazon S3 in a fully automated way, taking advantage of:

- Amazon S3 Multipart Upload: files are transparently uploaded in parts, which improves throughput and allows quick recovery from network issues.
- Resilient Retry System: Intelligent S3 Upload has been built to detect errors during the upload process and to retry whenever necessary.
- User-Friendly Interface: Just check the demo to see with your own eyes […]
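The three features above map onto fairly standard techniques. The sketch below is a minimal, stdlib-only illustration under stated assumptions, not the project's actual code. It mirrors a local folder tree as S3 keys, plans multipart part boundaries within S3's documented limits (a 5 MiB minimum for every part except the last, and at most 10,000 parts per upload), and wraps a call in retries with exponential backoff. The function names `s3_keys_for_folder`, `plan_parts` and `with_retries`, the 8 MiB default part size, the `prefix` parameter, and the assumption that network failures surface as `OSError` are all hypothetical choices. The sketch never talks to S3; a real uploader would hand each planned part to an S3 client such as boto3.

```python
import random
import time
from pathlib import Path

MIN_PART_SIZE = 5 * 1024 * 1024  # S3 minimum part size (all parts except the last)
MAX_PARTS = 10_000               # S3 maximum number of parts per multipart upload


def s3_keys_for_folder(root, prefix=""):
    """Map every file under `root` (including subfolders) to an S3 key.

    Keys use forward slashes so the local folder layout is mirrored in the
    bucket. `prefix` is a hypothetical option for placing files under a
    "folder" in the bucket.
    """
    root = Path(root)
    mapping = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            rel = path.relative_to(root).as_posix()
            mapping[str(path)] = f"{prefix.rstrip('/')}/{rel}" if prefix else rel
    return mapping


def plan_parts(file_size, part_size=8 * 1024 * 1024):
    """Split `file_size` bytes into (part_number, offset, length) tuples.

    Part numbers start at 1, as in S3 multipart uploads. An empty file yields
    no parts; it would normally be sent with a single PutObject instead.
    """
    part_size = max(part_size, MIN_PART_SIZE)
    # Double the part size until the file fits in at most MAX_PARTS parts.
    while -(-file_size // part_size) > MAX_PARTS:
        part_size *= 2
    parts = []
    offset, number = 0, 1
    while offset < file_size:
        length = min(part_size, file_size - offset)
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts


def with_retries(func, attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call `func()`, retrying with exponential backoff plus jitter.

    Assumption: transient network failures are raised as OSError subclasses
    (ConnectionError and TimeoutError both are). The last failure is re-raised.
    """
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except OSError:
            if attempt == attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))
```

For example, `plan_parts(20 * 1024 * 1024)` returns `[(1, 0, 8388608), (2, 8388608, 8388608), (3, 16777216, 4194304)]`: two full 8 MiB parts followed by a shorter final part. Because each part is independent, a failed part can be retried on its own through `with_retries` without restarting the whole file, which is where the faster recovery from network issues comes from.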
