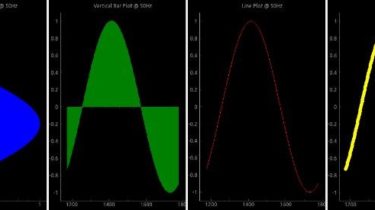

Pglive package adds support for thread-safe live plotting to pyqtgraph

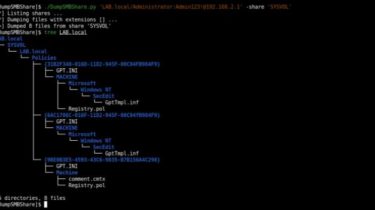

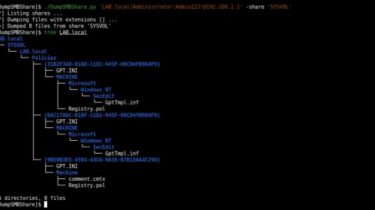

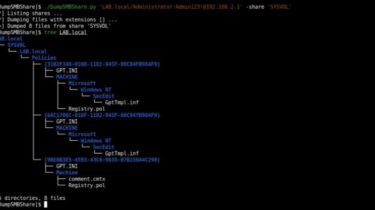

The Pglive package adds support for thread-safe live plotting to pyqtgraph. It supports PyQt5 and PyQt6. By default, pyqtgraph doesn’t support live plotting. The aim of this package is to provide an easy implementation of Line, Scatter and Bar live plots. Every plot is connected to its DataConnector, whose sole purpose is to consume data points and manage data re-plotting. The DataConnector interface provides Pause and Resume methods, an update rate and a maximum number of plotted points. Each time a data point is collected, call the DataConnector.cb_set_data or DataConnector.cb_append_data_point callback. That’s all You […]
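To make the connector idea concrete, here is a minimal standard-library sketch of the pattern described above: a bounded, lock-protected buffer that producer threads feed through callbacks. It is not pglive's implementation. `SketchConnector`, `snapshot` and `producer` are hypothetical names, the `(y, x)` argument order is an assumption, dropping points while paused is an assumption, and update-rate throttling and the actual re-plotting are left out.

```python
import threading
from collections import deque


class SketchConnector:
    """Hypothetical stand-in for a data connector; NOT pglive's implementation."""

    def __init__(self, max_points=600):
        self._lock = threading.Lock()
        # Bounded buffers: the oldest point is dropped once max_points is reached.
        self._x = deque(maxlen=max_points)
        self._y = deque(maxlen=max_points)
        self._paused = False

    def pause(self):
        with self._lock:
            self._paused = True

    def resume(self):
        with self._lock:
            self._paused = False

    def cb_append_data_point(self, y, x):
        """Append one point; safe to call from any thread."""
        with self._lock:
            if self._paused:  # assumption: points arriving while paused are dropped
                return
            self._x.append(x)
            self._y.append(y)

    def cb_set_data(self, y, x):
        """Replace the buffered data, keeping only the newest max_points."""
        with self._lock:
            if self._paused:
                return
            self._x.clear()
            self._y.clear()
            self._x.extend(x)
            self._y.extend(y)

    def snapshot(self):
        """Return a consistent copy of the buffers for a plotting step."""
        with self._lock:
            return list(self._x), list(self._y)


def producer(connector, start, count):
    # Each collected data point is handed to the connector callback.
    for i in range(start, start + count):
        connector.cb_append_data_point(y=i * 2, x=i)


connector = SketchConnector(max_points=600)
threads = [
    threading.Thread(target=producer, args=(connector, n * 250, 250))
    for n in range(4)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

xs, ys = connector.snapshot()
print(len(xs))  # prints 600: 1000 points were appended, only the newest 600 are kept
```

Taking the lock around both buffers in one step keeps every x paired with its y, even when several threads append at once. In a real GUI the plotting side would read such a snapshot on the Qt main thread at the configured update rate.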
