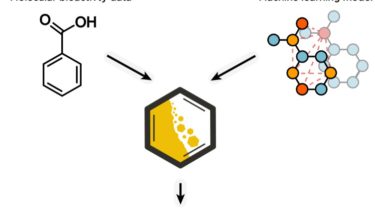

A tool for evaluating the predictive performance of machine learning models on activity cliff compounds

Molecule Activity Cliff Estimation (MoleculeACE) is a tool for evaluating the predictive performance of machine learning models on activity cliff compounds. MoleculeACE can be used to:

- Analyze and compare the performance on activity cliffs of machine learning methods typically employed in QSAR.
- Identify best practices to enhance a model’s predictivity in the presence of activity cliffs.
- Design guidelines to consider when developing novel QSAR approaches.

Benchmark study

In a benchmark study we collected and curated bioactivity data on 30 macromolecular […]
