ExLlamaV2: The Fastest Library to Run LLMs

Quantizing Large Language Models (LLMs) is the most popular approach to reduce the size of these models and speed up inference. Among quantization techniques, GPTQ delivers strong performance on GPUs. Compared to unquantized models, it uses almost 3 times less VRAM while providing a similar level of accuracy and faster generation. It became so popular that it has recently been directly integrated into the transformers library. ExLlamaV2 is a library designed to squeeze even more performance out of GPTQ. […]

Using Python for Data Analysis

Data analysis is a broad term that covers a wide range of techniques that enable you to reveal any insights and relationships that may exist within raw data. As you might expect, Python lends itself readily to data analysis. Once Python has analyzed your data, you can then use your findings to make good business decisions, improve procedures, and even make informed predictions based on what you’ve discovered. Before you start, you should familiarize yourself with Jupyter Notebook, a popular […]

Create a Tic-Tac-Toe Python Game Engine With an AI Player

A classic childhood game is tic-tac-toe, also known as naughts and crosses. It’s simple and enjoyable, and coding a version of it with Python is an exciting project for a budding programmer. Now, adding some artificial intelligence (AI) using Python can make an old favorite even more thrilling. In this comprehensive tutorial, you’ll construct a flexible game engine. This engine will include an unbeatable computer player that employs the minimax algorithm to play tic-tac-toe flawlessly. Throughout the tutorial, you’ll explore […]

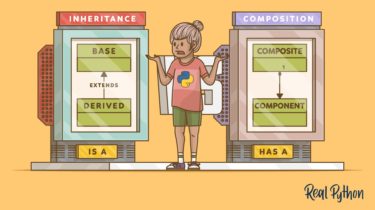

Inheritance and Composition: A Python OOP Guide

In this tutorial, you’ll explore inheritance and composition in Python, two important concepts in object-oriented programming that model the relationship between two classes. They’re the building blocks of object-oriented design, and they help programmers write reusable code. They drive the design of an application and determine how the application should evolve as new […]
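As a rough illustration of the two relationships the excerpt describes, here is a minimal sketch. The class names `Vehicle`, `Engine`, and `Car` are hypothetical and do not come from the tutorial itself: `Car` inherits from `Vehicle` (an "is a" relationship) and is composed with an `Engine` (a "has a" relationship).

```python
class Engine:
    """Hypothetical component class used for composition."""

    def start(self):
        return "engine started"


class Vehicle:
    """Hypothetical base class used for inheritance."""

    def __init__(self, name):
        self.name = name

    def describe(self):
        return f"{self.name} is a vehicle"


class Car(Vehicle):  # Inheritance: a Car *is a* Vehicle.
    def __init__(self, name):
        super().__init__(name)
        # Composition: a Car *has an* Engine and delegates work to it.
        self.engine = Engine()

    def start(self):
        return f"{self.name}: {self.engine.start()}"


car = Car("Roadster")
print(car.describe())  # Method inherited from Vehicle
print(car.start())     # Behavior delegated to the composed Engine
```

In this sketch, `describe()` is reused through inheritance, while `start()` is reused through the composed `Engine` object, so the engine could be swapped for another component without changing the class hierarchy.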

Python range(): Represent Numerical Ranges

A range is a Python object that represents an interval of integers. Usually, the numbers are consecutive, but you can also specify that you want to space them out. You can create ranges by calling range() with one, two, or three arguments, as the following examples show: In each example, you use list() to explicitly list the individual elements of each range. You’ll study these examples in more detail soon. A range can sometimes be a powerful tool. However, throughout […]
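The examples the excerpt refers to are not reproduced in this listing. A minimal sketch of the three call forms might look like the following, using only the built-in `range()` and `list()`; the specific numbers and variable names are chosen here for illustration and are not taken from the tutorial.

```python
# One argument: stop only. Counting starts at 0 and stops before 5.
one_arg = list(range(5))

# Two arguments: start and stop. The stop value is excluded.
two_args = list(range(1, 7))

# Three arguments: start, stop, and step, which spaces the numbers out.
three_args = list(range(1, 20, 4))

# A negative step counts down instead of up.
countdown = list(range(10, 0, -3))

print(one_arg)     # [0, 1, 2, 3, 4]
print(two_args)    # [1, 2, 3, 4, 5, 6]
print(three_args)  # [1, 5, 9, 13, 17]
print(countdown)   # [10, 7, 4, 1]
```

Wrapping each range in `list()` is only needed to display its elements; a range object by itself is lazy and doesn't store the individual numbers.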

NumPy 2 is coming: preventing breakage, updating your code

If you’re writing scientific or data science code with Python, there’s a good chance you’re using NumPy, directly or indirectly. Pandas, Scikit-Image, SciPy, Scikit-Learn, AstroPy… these and many other packages depend on NumPy. NumPy 2 is a new major release, with a release candidate coming out February 1st 2024, and a final release a month or two later. Importantly, it’s backwards incompatible; not in a major way, but enough that some work might be required to upgrade. And that means […]

Python Basics Exercises: Functions and Loops

As you learned in Python Basics: Functions and Loops, functions serve as the fundamental building blocks in almost every Python program. They’re where the real action happens! You now know that functions are crucial for breaking down code into smaller, manageable chunks. They enable you to define actions that your program can execute repeatedly throughout your code. Instead of duplicating the same code whenever your program needs to accomplish a particular task, you can simply call the function. However, there […]
