NLPBK at VLSP-2020 shared task: Compose transformer pretrained models for Reliable Intelligence Identification on Social network

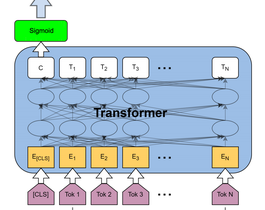

In our model, we generate representations of the post message in three ways: tokenized syllable-level text through Bert4News, tokenized word-level text through PhoBERT, and tokenized syllable-level text through XLM. We simply concatenate these three representations with the corresponding post metadata features. This can be considered a naive model, but this kind of concatenation has been shown to improve system performance (Tu et al. (2017), Thanh et al. (