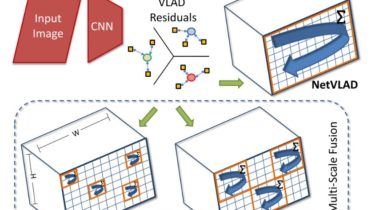

Multi-Scale Fusion of Locally-Global Descriptors for Place Recognition

Patch-NetVLAD

This repository contains code for the CVPR 2021 paper “Patch-NetVLAD: Multi-Scale Fusion of Locally-Global Descriptors for Place Recognition”.

The article can be found on arXiv and in the official proceedings.

Installation

We recommend using conda (or better: mamba) to install all dependencies. If you have not yet installed conda/mamba, please download and install mambaforge.

conda create -n patchnetvlad python=3.8 numpy pytorch-gpu torchvision natsort tqdm opencv pillow scikit-learn faiss matplotlib-base -c conda-forge

conda activate patchnetvlad
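To confirm that the environment was created correctly, a small check such as the following can be run inside the activated environment. This is a minimal sketch and not part of the repository: the helper `missing_modules` and the list `REQUIRED_MODULES` are hypothetical names, and the list assumes the usual import names for the conda packages above (for example, `opencv` is imported as `cv2`, `pillow` as `PIL`, and `scikit-learn` as `sklearn`).

```python
from importlib.util import find_spec

# Import names assumed to correspond to the conda packages listed above
# (opencv -> cv2, pillow -> PIL, scikit-learn -> sklearn, pytorch-gpu -> torch).
REQUIRED_MODULES = [
    "numpy", "torch", "torchvision", "natsort", "tqdm",
    "cv2", "PIL", "sklearn", "faiss", "matplotlib",
]


def missing_modules(names):
    """Return the top-level module names that cannot be located."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

The check only locates the modules without importing them, so it does not verify that a GPU is available or that the installed versions are compatible.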

We provide several pre-trained models and configuration files. The pre-trained models will be downloaded automatically into the pretrained_models folder the first time feature extraction is performed.

Alternatively, you can manually download the pre-trained models into a folder of your choice.
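The download-on-first-use behaviour described above can be illustrated with a generic pattern. The sketch below is an assumption for illustration only and is not the repository's implementation: `ensure_models` and the `download` callable are hypothetical, and the rule "any file in the folder counts as already downloaded" is a simplification.

```python
from pathlib import Path
from typing import Callable


def ensure_models(model_dir: Path, download: Callable[[Path], None]) -> bool:
    """Call `download` only if `model_dir` holds no files yet.

    Returns True if a download was triggered, False otherwise.
    `download` is a hypothetical callable that fetches files into `model_dir`.
    """
    # Create the folder if needed so that iterdir() below cannot fail.
    model_dir.mkdir(parents=True, exist_ok=True)
    has_files = any(p.is_file() for p in model_dir.iterdir())
    if has_files:
        return False
    download(model_dir)
    return True
```

With this pattern, models that were placed in the folder manually are treated the same way as models fetched automatically, so no second download is triggered.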

If you want