Machine Translation Weekly 64: Non-autoregressive Models Strike Back

Half a year ago, I featured a paper here (MT Weekly 45) that questioned the contribution of non-autoregressive (NAR) models to computational efficiency. It showed that a model with a deep encoder (which can be parallelized) and a shallow decoder (which works sequentially) reaches the same speed as NAR models with much better translation quality. A pre-print by Facebook AI and CMU published on New Year’s Eve, "Fully Non-autoregressive Neural Machine Translation: Tricks of the Trade", presents a new fully
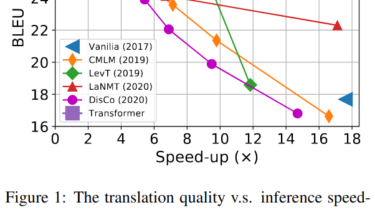

non-autoregressive model that seems to reach the same translation quality as autoregressive models with a 16× speed-up. Of course, the question is what the difference would be if they used a highly optimized implementation with all the tricks people use in the WNGT Efficiency Shared Task, but still, the