Machine Translation Weekly 62: The EDITOR

Papers about new models for sequence-to-sequence modeling have always been my favorite genre. This week I will talk about a model called EDITOR that was introduced in a pre-print of a paper that will appear in the TACL journal, with authors from the University of Maryland.

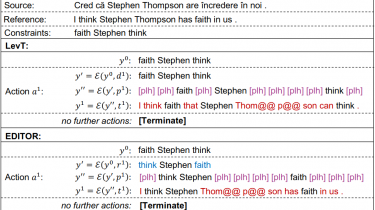

The model is based on the Levenshtein Transformer, a partially non-autoregressive model for sequence-to-sequence learning. Autoregressive models generate the output left-to-right (or right-to-left), conditioning each step on the previously generated tokens. Non-autoregressive models, on the other hand, assume that all target tokens are conditionally independent of each other given the source sentence. The generation can thus be heavily parallelized, which makes it very fast, but the speed typically comes at the expense of translation quality. Getting reasonable translation quality requires further tricks such as