Machine Translation Weekly 44: Tangled up in BLEU (and not blue)

For quite a while, machine translation has been approached as a behaviorist
simulation. You don't know what a good translation is? It does not matter, you
can just simulate what humans do. You don't know how to measure whether something
is a good translation? It does not matter, you can simulate what humans do again.

Things seem easy. We learn how to translate from tons of training data that

were translated by humans. When we want to measure how well the model simulates

human translation, we just measure the similarity between the model outputs and

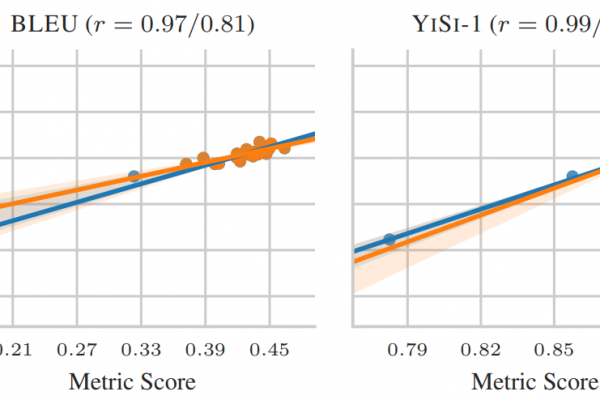

human translation. The only thing we need is a good sentence similarity measure

and in the realm of behaviorist simulation, a good similarity measure must

correlate well with human judgments about the translations. (So far, it ends

here, but maybe in the future, we will move the next level to develop an

evaluation metric for evaluation