LoRA: Low-Rank Adaptation of Large Language Models

LoRA

This repo contains the implementation of LoRA in GPT-2 and the steps to replicate the results in our recent paper:

LoRA: Low-Rank Adaptation of Large Language Models

Edward J. Hu*, Yelong Shen*, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Weizhu Chen

Paper: https://arxiv.org/abs/2106.09685

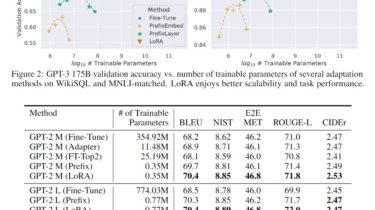

LoRA reduces the number of trainable parameters by learning pairs of rank-decomposition matrices while freezing the original weights. This vastly reduces the storage requirement for large language models adapted to specific tasks and enables efficient task-switching during deployment without introducing inference latency. LoRA also outperforms several other adaptation methods, including prefix-tuning and fine-tuning.
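To make the idea concrete, here is a minimal sketch in plain Python. It is not the code in this repo. It assumes a single frozen weight matrix `W` and one trainable pair `B`, `A` of rank `rank`; all names, shapes, and the random values are illustrative assumptions, and any scaling factor applied to the update is omitted. It shows two things: how few parameters the pair adds compared with the full matrix, and that folding `B @ A` into `W` gives the same output as running the adapter alongside `W`, which is why no extra inference latency is needed.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two matrices with the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def rand_matrix(rows, cols, rng):
    """Random matrix with entries in [-1, 1]; values are for illustration only."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters: full d_out x d_in matrix vs. a rank-`rank` pair."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)  # B is d_out x rank, A is rank x d_in
    return full, lora


rng = random.Random(0)
d_out, d_in, rank = 6, 8, 2  # hypothetical toy sizes

W = rand_matrix(d_out, d_in, rng)  # frozen pretrained weight, never updated
B = rand_matrix(d_out, rank, rng)  # trainable low-rank factor
A = rand_matrix(rank, d_in, rng)   # trainable low-rank factor
x = rand_matrix(d_in, 1, rng)      # input as a column vector

# Adapter kept separate during training: h = W x + B (A x)
h_adapter = add(matmul(W, x), matmul(B, matmul(A, x)))

# Adapter merged for deployment: h = (W + B A) x
W_merged = add(W, matmul(B, A))
h_merged = matmul(W_merged, x)

# The two paths agree up to floating-point rounding.
max_diff = max(abs(p[0] - q[0]) for p, q in zip(h_adapter, h_merged))

# Parameter counts for a hypothetical 768 x 768 layer with rank 4.
full, lora = lora_param_counts(768, 768, 4)
print(full, lora)  # 589824 6144
```

Switching tasks then amounts to swapping one small `B`, `A` pair for another while the shared `W` stays untouched.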

Getting Started

- You can start with the following docker image on a GPU-capable machine, but any generic PyTorch image should work: nvcr.io/nvidia/pytorch:20.03-py3

docker run -it nvcr.io/nvidia/pytorch:20.03-py3

- Clone the repo and