Learning Versatile Neural Architectures by Propagating Network Codes

NCP

Mingyu Ding, Yuqi Huo, Haoyu Lu, Linjie Yang, Zhe Wang, Zhiwu Lu, Jingdong Wang, Ping Luo

Introduction

This work includes:

(1) NAS-Bench-MR, a NAS benchmark built on four challenging datasets under practical training settings for learning task-transferable architectures.

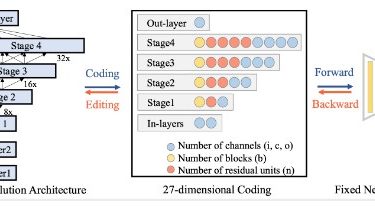

(2) An efficient predictor-based algorithm Network Coding Propagation (NCP), which back-propagates the gradients of neural predictors to directly update architecture codes along desired gradient directions for various objectives.

This framework is implemented and tested with Ubuntu/Mac OS, CUDA 9.0/10.0, Python 3, Pytorch 1.3-1.6, NVIDIA Tesla V100/CPU.

Dataset

We build our benchmark on four computer vision tasks, i.e., image classification (ImageNet), semantic segmentation (CityScapes), 3D detection (KITTI), and video recognition (HMDB51).

Totally 9 different settings are included, as shown in the data/*/trainval.pkl folders.

Note that each .pkl file contains more than 2500