Keeping Your Eye on the Ball Trajectory Attention in Video Transformers with python

Motionformer

This is an official pytorch implementation of paper Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers. In this repository, we provide PyTorch code for training and testing our proposed Motionformer model. Motionformer use proposed trajectory attention to achieve state-of-the-art results on several video action recognition benchmarks such as Kinetics-400 and Something-Something V2.

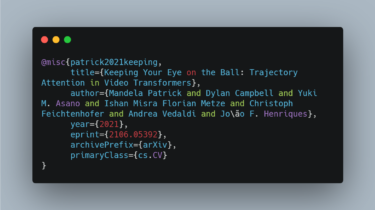

If you find Motionformer useful in your research, please use the following BibTeX entry for citation.

@misc{patrick2021keeping,

title={Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers},

author={Mandela Patrick and Dylan Campbell and Yuki M. Asano and Ishan Misra Florian Metze and Christoph Feichtenhofer and Andrea Vedaldi and João F. Henriques},

year={2021},

eprint={2106.05392},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

We provide Motionformer models pretrained on Kinetics-400 (K400), Kinetics-600 (K600), Something-Something-V2 (SSv2), and Epic-Kitchens