How to Use StandardScaler and MinMaxScaler Transforms in Python

Last Updated on August 28, 2020

Many machine learning algorithms perform better when numerical input variables are scaled to a standard range.

This includes algorithms that use a weighted sum of the input, like linear regression, and algorithms that use distance measures, like k-nearest neighbors.

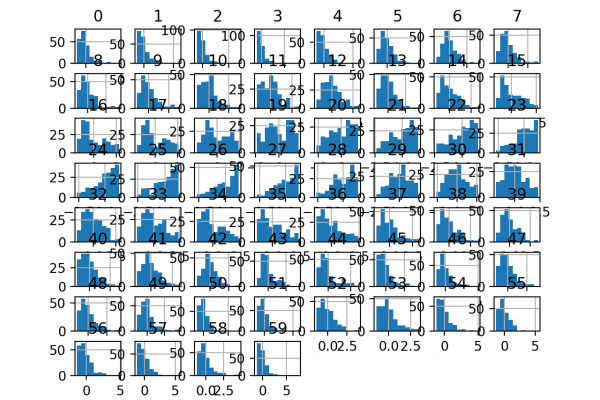

The two most popular techniques for scaling numerical data prior to modeling are normalization and standardization. Normalization scales each input variable separately to the range 0 to 1, the range in which floating-point values have the most precision. Standardization scales each input variable separately by subtracting the mean (called centering) and dividing by the standard deviation, so that the resulting distribution has a mean of zero and a standard deviation of one.
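To make the arithmetic behind the two techniques concrete, here is a minimal sketch in plain Python for a single input variable. The function names `normalize` and `standardize`, the sample column, and the handling of a constant column are illustrative assumptions, not part of any library. The population standard deviation is used here, which matches the default behavior of scikit-learn's `StandardScaler`.

```python
from statistics import fmean, pstdev


def normalize(values):
    """Min-max scale a list of numbers to the range 0 to 1."""
    lo, hi = min(values), max(values)
    span = hi - lo
    # Assumption: a constant column is mapped to zeros to avoid dividing by zero.
    if span == 0:
        return [0.0 for _ in values]
    return [(v - lo) / span for v in values]


def standardize(values):
    """Subtract the mean (centering) and divide by the standard deviation."""
    mean = fmean(values)
    std = pstdev(values)  # population standard deviation
    # Assumption: a constant column is mapped to zeros to avoid dividing by zero.
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


# Hypothetical values for one input variable.
column = [50.0, 30.0, 70.0, 90.0, 10.0]

normalized = normalize(column)
standardized = standardize(column)

print(normalized)    # values now lie between 0 and 1
print(standardized)  # values now have mean 0 and standard deviation 1
```

Each input variable (column) would be scaled on its own in this way, since the minimum, maximum, mean, and standard deviation differ from one variable to the next.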

In this tutorial, you will discover how to use scaler transforms to standardize and normalize numerical input variables for classification and regression.

After completing this tutorial, you will know:

- Data scaling is a recommended pre-processing step when working with many machine learning algorithms.

- Data scaling can be achieved by normalizing or standardizing real-valued input and output variables.

- How to apply standardization and normalization to improve the performance of predictive modeling algorithms.
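As a preview of the kind of usage the title refers to, the following is a minimal sketch that applies scikit-learn's `MinMaxScaler` and `StandardScaler` to a small array. The data values are made up for illustration, and the sketch assumes NumPy and scikit-learn are installed.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical toy data: two input variables on very different scales.
X = np.array([
    [100.0, 0.001],
    [8.0, 0.05],
    [50.0, 0.005],
    [88.0, 0.07],
    [4.0, 0.1],
])

# Normalization: each column is rescaled separately to the range 0 to 1.
X_norm = MinMaxScaler().fit_transform(X)

# Standardization: each column is centered and divided by its standard deviation.
X_std = StandardScaler().fit_transform(X)

print(X_norm)
print(X_std)
```

When evaluating a model, a scaler is typically fit on the training data only and then used to transform both the training and test data, so that statistics from the test set do not leak into the model.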

