How to Use Power Transforms for Machine Learning

Last Updated on August 28, 2020

Machine learning algorithms like Linear Regression and Gaussian Naive Bayes assume the numerical variables have a Gaussian probability distribution.

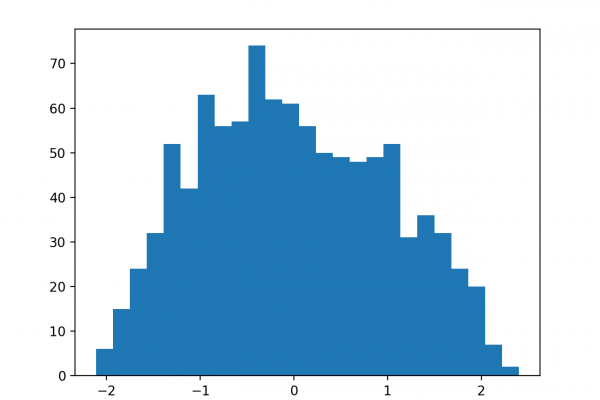

Your data may not have a Gaussian distribution and instead may have a Gaussian-like distribution (e.g. nearly Gaussian but with outliers or a skew) or a totally different distribution (e.g. exponential).

As such, you may be able to achieve better performance on a wide range of machine learning algorithms by transforming input and/or output variables to have a Gaussian or more-Gaussian distribution. Power transforms like the Box-Cox transform and the Yeo-Johnson transform provide an automatic way of performing these transforms on your data and are provided in the scikit-learn Python machine learning library.
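As a rough illustration of the idea, the sketch below applies scikit-learn's `PowerTransformer` to a skewed sample and compares the skewness before and after. The synthetic exponential data, the seed, and the variable names are assumptions made for this example only; they are not taken from the tutorial.

```python
import numpy as np
from scipy.stats import skew
from sklearn.preprocessing import PowerTransformer

# Hypothetical data: 1,000 draws from an exponential distribution (strongly right-skewed).
rng = np.random.default_rng(1)
data = rng.exponential(scale=2.0, size=(1000, 1))

# Yeo-Johnson is the default method; standardize=True also rescales the
# transformed values to zero mean and unit variance.
pt = PowerTransformer(method="yeo-johnson", standardize=True)
transformed = pt.fit_transform(data)

# Skewness near zero suggests a more symmetric, more Gaussian-like shape.
skew_before = float(skew(data[:, 0]))
skew_after = float(skew(transformed[:, 0]))
print(f"skew before: {skew_before:.3f}, skew after: {skew_after:.3f}")
print(f"fitted lambda: {pt.lambdas_[0]:.3f}")
```

The transform learns one power parameter (lambda) per column from the data, which is what makes it "automatic": no manual choice between a log, square root, or other power is needed.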

In this tutorial, you will discover how to use power transforms in scikit-learn to make variables more Gaussian for modeling.

After completing this tutorial, you will know:

- Many machine learning algorithms prefer or perform better when numerical variables have a Gaussian probability distribution.

- Power transforms are a technique for transforming numerical input or output variables to have a Gaussian or more Gaussian-like probability distribution.

- How to use the PowerTransformer class in scikit-learn to apply the Box-Cox and Yeo-Johnson transforms.
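One practical difference between the two methods is worth seeing early: Box-Cox is defined only for strictly positive values, while Yeo-Johnson also accepts zeros and negatives. The following is a minimal sketch of that difference, assuming made-up log-normal data; the variable names and the shift by 1.0 are hypothetical choices for illustration.

```python
import numpy as np
from sklearn.preprocessing import PowerTransformer

# Hypothetical data: strictly positive log-normal values, plus a shifted
# copy that contains negative values.
rng = np.random.default_rng(7)
positive = rng.lognormal(mean=0.0, sigma=1.0, size=(500, 1))
shifted = positive - 1.0

# Box-Cox works on the strictly positive column.
boxcox = PowerTransformer(method="box-cox")
boxcox_out = boxcox.fit_transform(positive)

# Box-Cox rejects data that is not strictly positive.
try:
    PowerTransformer(method="box-cox").fit(shifted)
    boxcox_failed = False
except ValueError:
    boxcox_failed = True

# Yeo-Johnson handles the same shifted data without complaint.
yeo = PowerTransformer(method="yeo-johnson")
yeo_out = yeo.fit_transform(shifted)

print("Box-Cox lambda on positive data:", boxcox.lambdas_[0])
print("Box-Cox failed on shifted data:", boxcox_failed)
print("Yeo-Johnson lambda on shifted data:", yeo.lambdas_[0])
```

If a variable can be zero or negative, Yeo-Johnson is the simpler choice; otherwise the data would need to be shifted or scaled into the positive range before Box-Cox can be applied.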
