How to Use Discretization Transforms for Machine Learning

Last Updated on August 28, 2020

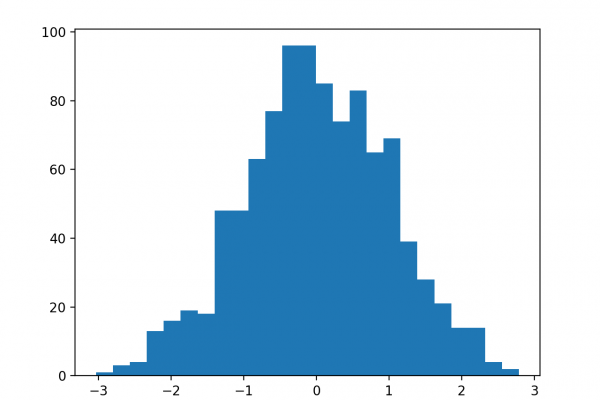

Numerical input variables may have a highly skewed or non-standard distribution.

This could be caused by outliers in the data, multi-modal distributions, long-tailed exponential distributions, and more.

Many machine learning algorithms prefer or perform better when numerical input variables have a standard probability distribution.

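The discretization transform provides an automatic way to change a numeric input variable to have a different data distribution. It does this by grouping the variable's values into a fixed number of bins and replacing each value with the label of its bin. The result can then be used as input to a predictive model.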
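As a rough illustration, the sketch below discretizes a skewed variable with scikit-learn's `KBinsDiscretizer`. The data are hypothetical (1,000 draws from an exponential distribution), and the choices of 10 bins and the `quantile` strategy are assumptions made for the example. The raw values pile up in the lowest of 10 equal-width intervals. After discretization, each ordinal label 0 to 9 holds roughly the same number of samples.

```python
import numpy as np
from sklearn.preprocessing import KBinsDiscretizer

# Hypothetical example data: 1,000 draws from a right-skewed exponential distribution
rng = np.random.default_rng(1)
X = rng.exponential(scale=1.0, size=(1000, 1))

# Raw values: counts per 10 equal-width intervals are concentrated in the first interval
raw_counts, _ = np.histogram(X[:, 0], bins=10)

# Discretize into 10 quantile-based bins, encoded as ordinal labels 0..9
kbins = KBinsDiscretizer(n_bins=10, encode="ordinal", strategy="quantile")
X_binned = kbins.fit_transform(X)

# Count how many samples fall under each ordinal label (roughly 100 each)
binned_counts = np.bincount(X_binned[:, 0].astype(int), minlength=10)

print("raw counts:   ", raw_counts)
print("binned counts:", binned_counts)
print("bin edges:    ", kbins.bin_edges_[0].round(3))
```

With `strategy="uniform"`, the bins would have equal widths instead. The labels would then be discrete, but their counts would stay as skewed as the raw histogram. Recent scikit-learn versions may emit a `FutureWarning` about the quantile computation method. The warning does not affect this example.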

In this tutorial, you will discover how to use discretization transforms to map numerical values to discrete categories for machine learning.

After completing this tutorial, you will know:

- Many machine learning algorithms prefer or perform better when numerical input variables with non-standard probability distributions are made discrete.

- Discretization transforms are a technique for transforming numerical input or output variables to have discrete ordinal labels.

- How to use the KBinsDiscretizer to change the structure and distribution of numeric variables to improve the performance of predictive models.
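To judge whether discretization helps a particular model, you can compare cross-validated scores with and without the transform. The sketch below is a minimal, hypothetical setup. It uses a synthetic dataset from `make_classification`, a k-nearest neighbors classifier, and 10 equal-width bins per feature. None of these choices comes from a specific recommendation, and on other data the discretized version may score better or worse.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import KBinsDiscretizer

# Hypothetical synthetic dataset standing in for a real problem
X, y = make_classification(n_samples=500, n_features=10, n_informative=5, random_state=1)

# Same evaluation protocol for both models: 10-fold CV repeated 3 times
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

# Baseline: k-nearest neighbors on the raw numeric inputs
scores_raw = cross_val_score(KNeighborsClassifier(), X, y, scoring="accuracy", cv=cv)

# Discretize every input into 10 equal-width ordinal bins before the model;
# inside a Pipeline the bin edges are learned from the training folds only
pipeline = Pipeline(steps=[
    ("kbins", KBinsDiscretizer(n_bins=10, encode="ordinal", strategy="uniform")),
    ("model", KNeighborsClassifier()),
])
scores_binned = cross_val_score(pipeline, X, y, scoring="accuracy", cv=cv)

print(f"raw inputs:         {scores_raw.mean():.3f} ({scores_raw.std():.3f})")
print(f"discretized inputs: {scores_binned.mean():.3f} ({scores_binned.std():.3f})")
```

The discretizer sits inside a `Pipeline` so that it is refit on each training fold. This keeps information from the test fold out of the bin edges and avoids data leakage during evaluation.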


Let’s get started.