How to Reduce Overfitting With Dropout Regularization in Keras

Last Updated on August 25, 2020

Dropout regularization is a computationally cheap way to regularize a deep neural network.

Dropout works by probabilistically removing, or “dropping out,” inputs to a layer, which may be input variables in the data sample or activations from a previous layer. This has the effect of simulating a large number of networks with very different structures and, in turn, makes the nodes in the network generally more robust to their inputs.
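To make the idea concrete, here is a minimal sketch of the mechanism in plain Python. It is not how Keras implements dropout internally; the function name `dropout`, its arguments, and the example values are assumptions chosen for illustration. The sketch also rescales the surviving inputs by `1 / (1 - rate)` (often called inverted dropout), a detail not covered above, so that the expected sum of the inputs stays the same during training.

```python
import random


def dropout(inputs, rate, rng, training=True):
    """Randomly zero each input with probability `rate` (illustrative helper)."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in the range [0, 1)")
    # At inference time (or with rate 0) the inputs pass through unchanged.
    if not training or rate == 0.0:
        return list(inputs)
    keep_prob = 1.0 - rate
    # Each input is kept with probability keep_prob and scaled up by
    # 1 / keep_prob; otherwise it is replaced with zero.
    return [x / keep_prob if rng.random() >= rate else 0.0 for x in inputs]


rng = random.Random(1)  # seeded only so the run is repeatable
activations = [1.0] * 10  # stand-in for the outputs of a previous layer
dropped = dropout(activations, rate=0.5, rng=rng)
print(dropped)
```

Each call draws a fresh random mask, so every training step effectively sees a different thinned version of the network, which is where the "many networks" intuition comes from.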

In this tutorial, you will discover the Keras API for adding dropout regularization to deep learning neural network models.

After completing this tutorial, you will know:

- How to create a dropout layer using the Keras API.

- How to add dropout regularization to MLP, CNN, and RNN layers using the Keras API.

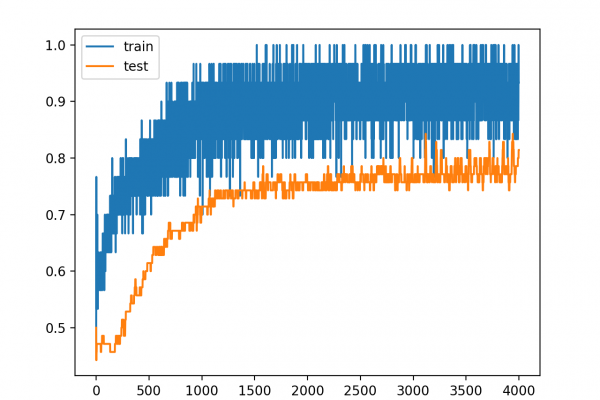

- How to reduce overfitting by adding a dropout regularization to an existing model.
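As a preview of the first two objectives, the sketch below adds a `Dropout` layer between two `Dense` layers of a small MLP using the `tensorflow.keras` API. The layer sizes, the 20-feature input shape, the dropout rate of 0.5, and the binary classification setup are all assumptions chosen for illustration, not values taken from this tutorial.

```python
from tensorflow.keras import Input
from tensorflow.keras.layers import Dense, Dropout
from tensorflow.keras.models import Sequential

# A small MLP for a hypothetical binary classification task with 20 features.
model = Sequential([
    Input(shape=(20,)),
    Dense(64, activation="relu"),
    # Drop 50% of the hidden layer's outputs during training only.
    Dropout(0.5),
    Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.summary()
```

The `Dropout` layer is only active while the model is training, for example inside `model.fit()`. During `model.predict()` and `model.evaluate()` it passes its inputs through unchanged.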

Kick-start your project with my new book Better Deep Learning, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Updated Oct/2019: Updated for Keras 2.3 and TensorFlow 2.0.
