How to Reduce Overfitting Using Weight Constraints in Keras

Last Updated on August 25, 2020

Weight constraints offer a way to reduce the overfitting of a deep learning neural network model to its training data and to improve the model's performance on new data, such as a holdout test set.

There are multiple types of weight constraints, such as maximum and unit vector norms, and some require a hyperparameter that must be configured.
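To make the two constraint types concrete, here is a minimal pure-Python sketch of what a maximum norm and a unit norm constraint do to a single weight vector. The function names `apply_max_norm` and `apply_unit_norm` and the limit of 2.0 are assumptions chosen for illustration; they are not part of the Keras API, and library implementations may differ in details such as numerical epsilon handling.

```python
import math

def l2_norm(weights):
    """Return the L2 (Euclidean) norm of a list of weights."""
    return math.sqrt(sum(w * w for w in weights))

def apply_max_norm(weights, max_value=2.0):
    """Rescale the vector only if its norm exceeds max_value (the hyperparameter)."""
    norm = l2_norm(weights)
    if norm <= max_value:
        return list(weights)  # already within the limit, leave unchanged
    scale = max_value / norm
    return [w * scale for w in weights]

def apply_unit_norm(weights):
    """Rescale the vector so its norm is 1.0; needs no hyperparameter."""
    norm = l2_norm(weights)
    if norm == 0.0:
        return list(weights)  # avoid dividing by zero for an all-zero vector
    return [w / norm for w in weights]

incoming = [3.0, 4.0]                           # hypothetical weights with norm 5.0
clipped = apply_max_norm(incoming, max_value=2.0)
unit = apply_unit_norm(incoming)
small = apply_max_norm([0.3, 0.4], max_value=2.0)  # norm below the limit

print(l2_norm(clipped), l2_norm(unit), small)
```

The maximum norm only acts when the vector grows too large, which is why it needs a configured limit, while the unit norm always forces the magnitude to 1.0.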

In this tutorial, you will discover the Keras API for adding weight constraints to deep learning neural network models to reduce overfitting.

After completing this tutorial, you will know:

- How to create vector norm constraints using the Keras API.

- How to add weight constraints to MLP, CNN, and RNN layers using the Keras API.

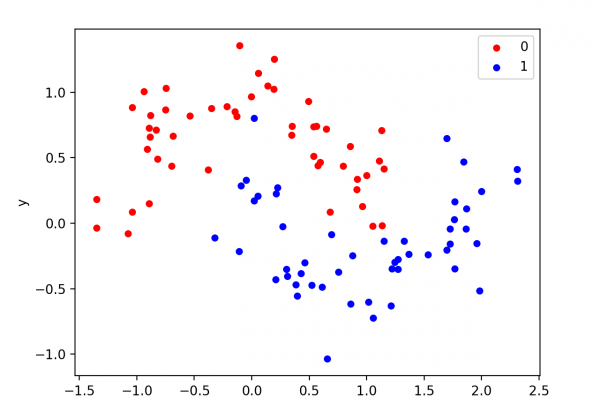

- How to reduce overfitting by adding a weight constraint to an existing model.
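As a rough preview of the points above, the sketch below attaches constraints to a dense (MLP), convolutional, and recurrent layer. It assumes TensorFlow 2.x with its bundled Keras is installed and imports from `tensorflow.keras`; the layer sizes and the maximum norm value of 3.0 are illustrative choices, not recommendations from the tutorial.

```python
from tensorflow.keras.constraints import MaxNorm, UnitNorm
from tensorflow.keras.layers import Dense, Conv2D, LSTM

# Constraint objects; max_value=3.0 is an assumed, illustrative hyperparameter.
norm = MaxNorm(max_value=3.0)
unit = UnitNorm()

# MLP layer: constrain the input weights and, optionally, the bias.
dense = Dense(32, activation="relu", kernel_constraint=norm, bias_constraint=norm)

# CNN layer: the same arguments apply to the convolutional kernels.
conv = Conv2D(32, (3, 3), kernel_constraint=norm, bias_constraint=norm)

# RNN layer: recurrent weights take their own, separate constraint argument.
lstm = LSTM(32, kernel_constraint=norm, recurrent_constraint=norm, bias_constraint=norm)

# A unit norm constraint is passed the same way and needs no max_value.
unit_dense = Dense(32, kernel_constraint=unit)
```

To add a constraint to an existing model, the same keyword arguments can be added to the layer definitions already in the model before it is compiled and fit again.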

Kick-start your project with my new book Better Deep Learning, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Updated Mar/2019: fixed typo using equality instead of assignment in some usage examples.

- Updated Oct/2019: Updated for Keras 2.3 and TensorFlow 2.0.

To finish reading, please visit source site
