How to Perform Feature Selection With Numerical Input Data

Last Updated on August 18, 2020

Feature selection is the process of identifying and selecting a subset of input features that are most relevant to the target variable.

Feature selection is often straightforward when working with real-valued input and output data, such as using the Pearson’s correlation coefficient, but can be challenging when working with numerical input data and a categorical target variable.

The two most commonly used feature selection methods for numerical input data when the target variable is categorical (e.g. classification predictive modeling) are the ANOVA f-test statistic and the mutual information statistic.

In this tutorial, you will discover how to perform feature selection with numerical input data for classification.

After completing this tutorial, you will know:

- The diabetes predictive modeling problem with numerical inputs and binary classification target variables.

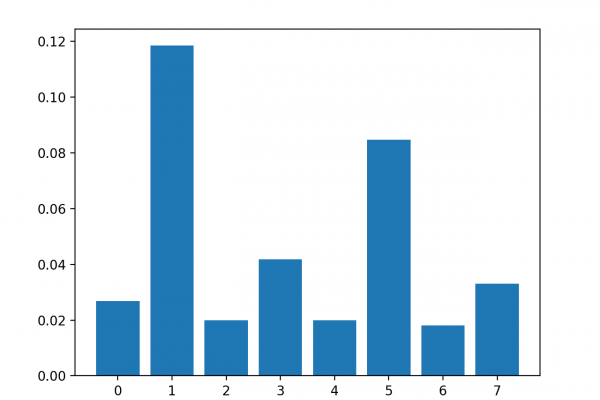

- How to evaluate the importance of numerical features using the ANOVA f-test and mutual information statistics.

- How to perform feature selection for numerical data when fitting and evaluating a classification model.

Kick-start your project with my new book Data Preparation for Machine Learning, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.