How to Perform Feature Selection for Regression Data

Last Updated on August 18, 2020

Feature selection is the process of identifying and selecting a subset of input variables that are most relevant to the target variable.

Perhaps the simplest case of feature selection is the one where both the input variables and the target are numerical, as in regression predictive modeling. In this case, the strength of the relationship between each input variable and the target can be calculated (a measure called correlation), and the input variables can then be compared by that score.
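To make the idea concrete, here is a minimal sketch using only NumPy. The synthetic dataset is an assumption for illustration: the target is built from columns 0 and 2 plus noise. The helper `pearson_with_target` is a hypothetical name, not a library function. It computes the Pearson correlation between each input column and the target, and the features are ranked by the absolute value of that score.

```python
import numpy as np

# Hypothetical synthetic data (an assumption): 200 rows, 5 numerical inputs.
rng = np.random.default_rng(seed=1)
X = rng.normal(size=(200, 5))
# By construction the target depends only on columns 0 and 2, plus noise.
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(scale=0.5, size=200)


def pearson_with_target(X, y):
    """Return the Pearson correlation between each column of X and y."""
    Xc = X - X.mean(axis=0)  # center each input column
    yc = y - y.mean()        # center the target
    num = Xc.T @ yc          # covariance numerators, one per column
    den = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    return num / den


corr = pearson_with_target(X, y)
# Rank by absolute correlation: a strong negative relationship is as useful as a positive one.
ranking = np.argsort(-np.abs(corr))
for idx in ranking:
    print(f"feature {idx}: r = {corr[idx]:+.3f}")
```

Ranking by absolute value is a design choice: the sign only tells you the direction of the relationship, not its strength.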

In this tutorial, you will discover how to perform feature selection with numerical input data for regression predictive modeling.

After completing this tutorial, you will know:

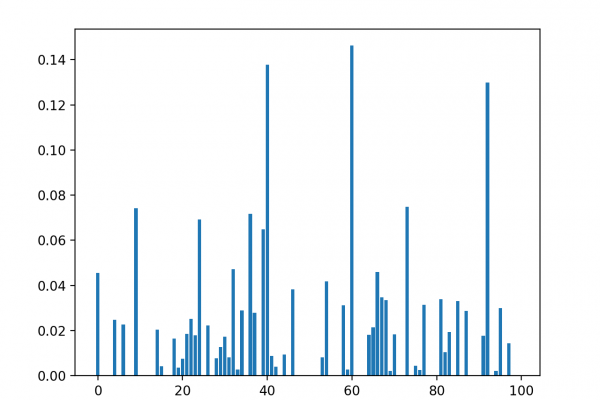

- How to evaluate the importance of numerical input data using the correlation and mutual information statistics.

- How to perform feature selection for numerical input data when fitting and evaluating a regression model.

- How to tune the number of features selected in a modeling pipeline using a grid search.
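As a preview of the first point, the sketch below scores each input with two statistics available in scikit-learn: `f_regression`, a correlation-based F-statistic, and `mutual_info_regression`, a non-negative estimate that can also pick up non-linear dependence. The dataset produced by `make_regression` (10 inputs, 3 informative) is an assumption for illustration; passing `coef=True` lets us see which inputs truly drive the target.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import f_regression, mutual_info_regression

# Synthetic regression data (an assumption): 10 inputs, of which only 3 are informative.
X, y, coef = make_regression(
    n_samples=500, n_features=10, n_informative=3, noise=0.1, coef=True, random_state=1
)
informative = np.flatnonzero(coef)  # indices of columns with a non-zero true coefficient
print("Truly informative features:", informative)

# Correlation-based score: returns (F-statistics, p-values), one per input column.
f_scores, p_values = f_regression(X, y)

# Mutual information estimate: larger means more shared information with the target.
mi_scores = mutual_info_regression(X, y, random_state=1)

for i, (f, mi) in enumerate(zip(f_scores, mi_scores)):
    print(f"feature {i}: F = {f:10.2f}  MI = {mi:.3f}")
```

Higher scores in either column suggest a more relevant input; the two statistics will not always agree, especially when a relationship is non-linear.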
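The second and third points fit together naturally. A minimal sketch, assuming a synthetic dataset, a `LinearRegression` model, `RepeatedKFold` cross-validation, and mean absolute error as the metric (all illustrative choices, not the only options): the selector lives inside a `Pipeline` so it is re-fit on each training fold, and `GridSearchCV` tries every value of `k` for `SelectKBest`.

```python
from sklearn.datasets import make_regression
from sklearn.feature_selection import SelectKBest, f_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import GridSearchCV, RepeatedKFold
from sklearn.pipeline import Pipeline

# Synthetic data (an assumption): 20 inputs, 5 of them informative.
X, y = make_regression(
    n_samples=500, n_features=20, n_informative=5, noise=0.1, random_state=1
)

# Putting selection inside the pipeline means the feature scores are computed
# only from the training folds, so the held-out fold never leaks into selection.
pipeline = Pipeline([
    ("sel", SelectKBest(score_func=f_regression)),
    ("lr", LinearRegression()),
])

# Try every possible number of selected features.
grid = {"sel__k": list(range(1, X.shape[1] + 1))}
cv = RepeatedKFold(n_splits=5, n_repeats=3, random_state=1)
search = GridSearchCV(pipeline, grid, scoring="neg_mean_absolute_error", cv=cv)
search.fit(X, y)

best_k = search.best_params_["sel__k"]
print("Best k:", best_k)
# scikit-learn maximizes scores, so MAE is reported as a negative number.
print("Best MAE: %.3f" % -search.best_score_)
```

Selecting features on the full dataset before cross-validation would let the test folds influence which inputs are kept, which tends to give an optimistic estimate; the pipeline avoids that.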

Kick-start your project with my new book Data Preparation for Machine Learning, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

To finish reading, please visit source site
