How to Implement Gradient Descent Optimization from Scratch

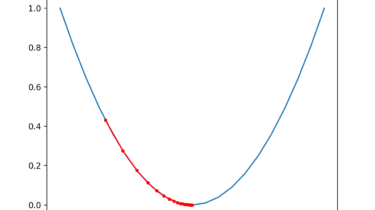

Gradient descent is an optimization algorithm that follows the negative gradient of an objective function to locate a minimum of that function.

It is a simple and effective technique that can be implemented with just a few lines of code. It also provides the basis for many extensions and modifications that can result in better performance, including the widely used stochastic gradient descent, which is used to train deep learning neural networks.
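To give a sense of how little code is involved, here is a minimal sketch of the core loop for a one-dimensional function. The function name `gradient_descent`, its parameters (`derivative`, `start`, `step_size`, `n_iter`), and the test objective f(x) = x^2 are all assumptions chosen for illustration, not the implementation developed in this tutorial.

```python
# Minimal sketch of gradient descent for a one-dimensional function.
# All names and parameter values here are illustrative assumptions.

def gradient_descent(derivative, start, step_size, n_iter):
    """Repeatedly step against the derivative, starting from `start`."""
    x = start
    for _ in range(n_iter):
        # Move in the direction of the negative gradient.
        x = x - step_size * derivative(x)
    return x

# Assumed example objective: f(x) = x^2, whose derivative is f'(x) = 2x.
solution = gradient_descent(lambda x: 2.0 * x, start=1.0, step_size=0.1, n_iter=50)
print(solution)
```

With these settings each step multiplies the current point by 0.8, so the result approaches the minimum at x = 0. A step size that is too large can make the iterates diverge instead.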

In this tutorial, you will discover how to implement gradient descent optimization from scratch.

After completing this tutorial, you will know:

- Gradient descent is a general procedure for optimizing a differentiable objective function.

- How to implement the gradient descent