How to Develop an AdaBoost Ensemble in Python

Last Updated on August 13, 2020

Boosting is a class of ensemble machine learning algorithms that involve combining the predictions from many weak learners.

A weak learner is a very simple model that still has some skill on the dataset. Boosting was a theoretical concept long before a practical algorithm could be developed, and the AdaBoost (adaptive boosting) algorithm was the first successful approach for the idea.

The AdaBoost algorithm involves using very short (one-level) decision trees, often called decision stumps, as weak learners that are added sequentially to the ensemble. Each subsequent model attempts to correct the predictions made by the model before it in the sequence. This is achieved by weighting the training dataset to put more focus on training examples on which prior models made prediction errors.
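To make the weighting idea concrete, here is a minimal from-scratch sketch of discrete AdaBoost with one-level decision trees, written in plain Python. It is an illustration under stated assumptions, not the scikit-learn implementation: the helper names (`fit_stump`, `stump_predict`, `adaboost_fit`, `adaboost_predict`) and the ten-point toy dataset are hypothetical, and labels are assumed to be encoded as +1 and -1.

```python
import math

def fit_stump(X, y, w):
    """Exhaustively search for the one-level tree with the lowest weighted error."""
    best = None
    for j in range(len(X[0])):                      # each feature
        for t in sorted({row[j] for row in X}):     # each candidate threshold
            for polarity in (1, -1):                # which side predicts +1
                preds = [polarity if row[j] <= t else -polarity for row in X]
                err = sum(wi for wi, p, yi in zip(w, preds, y) if p != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, polarity)
    return best

def stump_predict(stump, row):
    _, j, t, polarity = stump
    return polarity if row[j] <= t else -polarity

def adaboost_fit(X, y, n_rounds=10):
    n = len(X)
    w = [1.0 / n] * n                               # start with uniform weights
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, w)
        # Clamp the error so the log below is always defined.
        err = min(max(stump[0], 1e-10), 1 - 1e-10)
        alpha = 0.5 * math.log((1 - err) / err)     # this stump's vote strength
        ensemble.append((alpha, stump))
        # Misclassified examples (y * prediction == -1) gain weight,
        # correctly classified examples lose weight.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for wi, yi, row in zip(w, y, X)]
        total = sum(w)
        w = [wi / total for wi in w]                # renormalize to sum to 1
    return ensemble

def adaboost_predict(ensemble, row):
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy 1-D dataset (an assumption for illustration): no single stump separates it.
X = [[0], [1], [2], [3], [4], [5], [6], [7], [8], [9]]
y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

model = adaboost_fit(X, y, n_rounds=3)
predictions = [adaboost_predict(model, row) for row in X]
accuracy = sum(p == yi for p, yi in zip(predictions, y)) / len(y)
print("training accuracy:", accuracy)
```

On this toy data the best single stump misclassifies three of the ten points, while three stumps combined by weighted vote fit all ten, which is the sequential error correction the paragraph above describes.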

In this tutorial, you will discover how to develop AdaBoost ensembles for classification and regression.

After completing this tutorial, you will know:

- The AdaBoost ensemble is an ensemble created from decision trees added sequentially to the model.

- How to use the AdaBoost ensemble for classification and regression with scikit-learn.

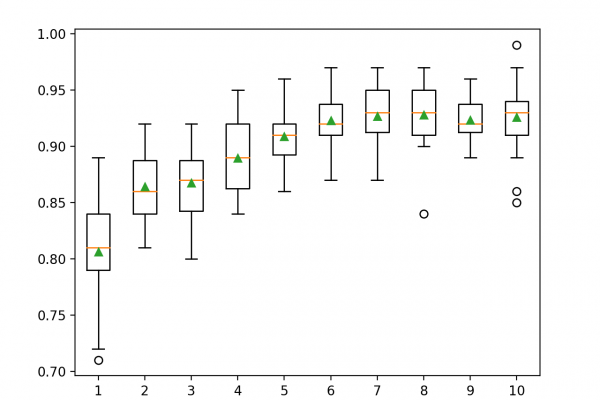

- How to explore the effect of AdaBoost model hyperparameters on model performance.
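As a preview of the points listed above, the following is a minimal sketch of evaluating a scikit-learn `AdaBoostClassifier` with repeated stratified k-fold cross-validation while varying one hyperparameter, `n_estimators`. The synthetic dataset, the candidate values, and the random seeds are assumptions chosen for illustration, not settings taken from this tutorial.

```python
from numpy import mean, std
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score

# Synthetic binary classification problem (sizes and seed are illustrative).
X, y = make_classification(n_samples=500, n_features=20, n_informative=15,
                           n_redundant=5, random_state=6)

# Repeated stratified k-fold gives a less noisy accuracy estimate.
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=2, random_state=1)

results = {}
for n_estimators in (10, 50, 100):
    # The default weak learner is a one-level decision tree.
    model = AdaBoostClassifier(n_estimators=n_estimators, random_state=1)
    scores = cross_val_score(model, X, y, scoring="accuracy", cv=cv)
    results[n_estimators] = mean(scores)
    print("n_estimators=%d: %.3f (%.3f)" % (n_estimators, mean(scores), std(scores)))
```

The same pattern should carry over to regression by swapping in `AdaBoostRegressor`, `make_regression`, a non-stratified splitter such as `RepeatedKFold`, and a regression metric.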

Let’s get started.

- Updated Aug/2020: Added an example of grid searching model hyperparameters.
