How to Develop a Weighted Average Ensemble for Deep Learning Neural Networks

Last Updated on August 25, 2020

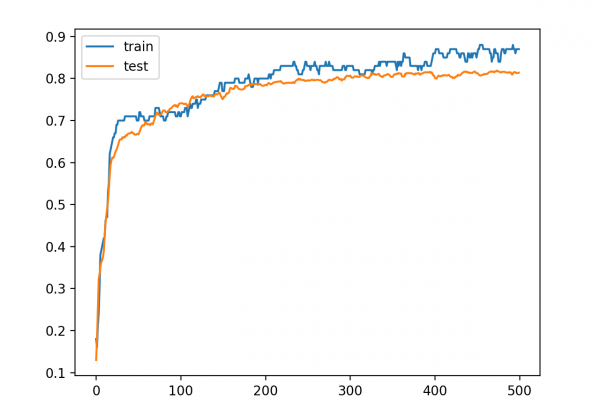

A model averaging ensemble combines the predictions from each model equally and often results in better average performance than a given single model.
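As a rough illustration of "combines the predictions from each model equally", here is a minimal NumPy sketch. It assumes each member is a classifier whose `predict()` output (for example, from a Keras model with a softmax output layer) is an array of class probabilities of shape `(n_samples, n_classes)`, and that those arrays have been stacked into one array. The function name `average_ensemble_predict` and the probability values are hypothetical, chosen only for illustration.

```python
import numpy as np

def average_ensemble_predict(member_probs):
    """Model averaging: every member contributes equally.

    member_probs has shape (n_members, n_samples, n_classes), e.g. the
    stacked outputs of each member's predict() call on the same inputs
    (an assumption for this sketch).
    """
    # Equal-weight mean over the member axis -> (n_samples, n_classes)
    mean_probs = np.mean(member_probs, axis=0)
    # The predicted class is the one with the highest averaged probability
    return np.argmax(mean_probs, axis=1)

# Hypothetical softmax outputs: three models, two samples, three classes
probs = np.array([
    [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]],
    [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]],
    [[0.2, 0.5, 0.3], [0.1, 0.4, 0.5]],
])
print(average_ensemble_predict(probs))  # → [0 2]
```

Because every member gets the same weight, the third model's preference for class 1 on the first sample is simply outvoted by the other two.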

Sometimes there are very good models that we wish to contribute more to an ensemble prediction, and perhaps less skillful models that may still be useful but should contribute less. A weighted average ensemble allows multiple models to contribute to a prediction in proportion to the trust placed in them or to their estimated performance.
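The weighted variant changes only how the member outputs are combined. The sketch below is a minimal illustration that uses `np.average` with a `weights` argument; it is not necessarily how the full tutorial implements the combination. The function name, the example probabilities, and the weight values are all hypothetical. Note that `np.average` divides by the sum of the weights, so the weights do not have to sum to 1.

```python
import numpy as np

def weighted_ensemble_predict(member_probs, weights):
    """Weighted average ensemble: member i contributes in proportion to weights[i].

    member_probs: (n_members, n_samples, n_classes) stacked class probabilities.
    weights: one non-negative weight per member (hypothetical values below).
    """
    weights = np.asarray(weights, dtype=float)
    # np.average normalizes by weights.sum() along the member axis
    combined = np.average(member_probs, axis=0, weights=weights)
    return np.argmax(combined, axis=1)

# Same hypothetical outputs: three models, two samples, three classes
probs = np.array([
    [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]],
    [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]],
    [[0.2, 0.5, 0.3], [0.1, 0.4, 0.5]],
])
print(weighted_ensemble_predict(probs, [1, 1, 1]))        # → [0 2]
print(weighted_ensemble_predict(probs, [0.1, 0.1, 0.8]))  # → [1 2]
```

With equal weights, this reduces to plain model averaging. When the third model is trusted far more than the others, its vote for class 1 on the first sample wins.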

In this tutorial, you will discover how to develop a weighted average ensemble of deep learning neural network models in Python with Keras.

After completing this tutorial, you will know:

- Model averaging ensembles are limited because they require that each ensemble member contribute equally to predictions.

- Weighted average ensembles allow the contribution of each ensemble member to a prediction to be weighted proportionally to the trust or performance of the member on a holdout dataset.

- How to implement a weighted average ensemble in Keras and compare results to a model averaging ensemble and standalone models.
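The second and third points can be sketched without Keras, using only NumPy arrays that stand in for each model's predicted probabilities. In this sketch, each member's weight is derived from its accuracy on a holdout set, and standalone, averaged, and weighted results are then compared on a separate test set. Weighting by normalized holdout accuracy is one simple scheme assumed here for illustration; the full tutorial may choose weights differently. The helper `make_probs` and the toy labels are hypothetical.

```python
import numpy as np

def make_probs(pred_labels, n_classes=3):
    """Hypothetical helper: turn hard labels into softmax-like rows (0.8 on the label)."""
    return np.eye(n_classes)[pred_labels] * 0.7 + 0.1

def accuracy(probs, y_true):
    """Fraction of rows whose highest-probability class matches the label."""
    return float(np.mean(np.argmax(probs, axis=-1) == y_true))

def holdout_weights(member_probs, y_holdout):
    """Assumed scheme: weight = holdout accuracy, normalized to sum to 1."""
    scores = np.array([accuracy(p, y_holdout) for p in member_probs])
    return scores / scores.sum()

def ensemble_accuracy(member_probs, y_true, weights=None):
    """Accuracy of the averaged prediction; weights=None means equal weights."""
    combined = np.average(member_probs, axis=0, weights=weights)
    return accuracy(combined, y_true)

# Toy holdout set: member 0 is strong, members 1 and 2 are weak
y_holdout = np.array([0, 1, 2, 0])
holdout = np.stack([make_probs(np.array(p)) for p in
                    ([0, 1, 2, 0], [0, 2, 1, 1], [1, 1, 0, 2])])

# Toy test set where the two weak members agree on wrong answers
y_test = np.array([2, 0, 1])
test = np.stack([make_probs(np.array(p)) for p in
                 ([2, 0, 1], [0, 1, 1], [0, 1, 1])])

w = holdout_weights(holdout, y_holdout)
for i, member in enumerate(test):
    print(f"model {i}: {accuracy(member, y_test):.3f}")
print(f"average ensemble:  {ensemble_accuracy(test, y_test):.3f}")
print(f"weighted ensemble: {ensemble_accuracy(test, y_test, w):.3f}")
```

On this contrived data, the holdout-derived weights come out as 2/3, 1/6, and 1/6. The equal average is outvoted by the two weak members and scores 1/3, while the weighted ensemble follows the strong member and scores 1.0. Real models will rarely show such a clean gap, and weights fitted on a small holdout set can overfit it.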
