How to Accelerate Learning of Deep Neural Networks With Batch Normalization

Last Updated on August 25, 2020

Batch normalization is a technique designed to automatically standardize the inputs to a layer in a deep learning neural network, using the mean and variance of each mini-batch during training.
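To make "standardize the inputs to a layer" concrete, here is a minimal plain-Python sketch of the training-time computation, assuming the usual formulation $y = \gamma \frac{x - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}} + \beta$. Each feature is standardized with the mean $\mu_B$ and variance $\sigma_B^2$ of the current mini-batch, then scaled by a learnable $\gamma$ and shifted by a learnable $\beta$. The function name `batch_norm_train` and the list-of-lists data layout are hypothetical choices for illustration. They are not part of the Keras API, which handles all of this inside its `BatchNormalization` layer.

```python
import math

def batch_norm_train(batch, gamma, beta, epsilon=1e-3):
    """Hypothetical helper: batch-normalize a mini-batch in training mode.

    batch: list of samples, each a list of feature values.
    gamma, beta: per-feature learnable scale and shift.
    Returns (outputs, batch_means, batch_variances).
    """
    n = len(batch)
    num_features = len(batch[0])
    # Per-feature mean over the samples in this mini-batch
    means = [sum(row[j] for row in batch) / n for j in range(num_features)]
    # Per-feature (biased) variance over the same mini-batch
    variances = [
        sum((row[j] - means[j]) ** 2 for row in batch) / n
        for j in range(num_features)
    ]
    outputs = []
    for row in batch:
        # Standardize, then apply the learnable scale (gamma) and shift (beta)
        outputs.append([
            gamma[j] * (row[j] - means[j]) / math.sqrt(variances[j] + epsilon) + beta[j]
            for j in range(num_features)
        ])
    return outputs, means, variances


batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]]
out, means, variances = batch_norm_train(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
print(means)      # [2.5, 25.0]
print(variances)  # [1.25, 125.0]
```

The two input features sit on very different scales, but after normalization both output columns have a mean of roughly 0 and a variance of roughly 1. The small `epsilon` only guards against division by zero.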

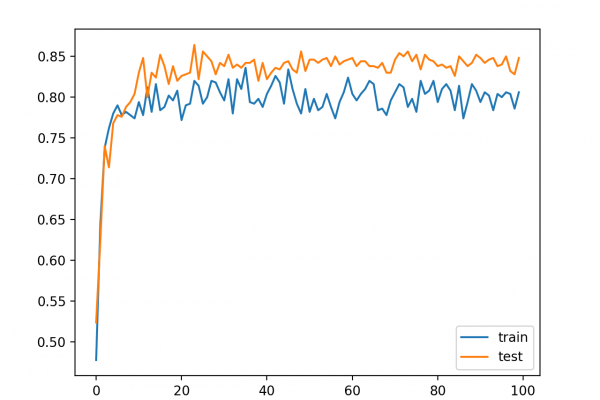

Once added to a model, batch normalization can dramatically accelerate the training of a neural network and, in some cases, improve the model's performance through a modest regularization effect.

In this tutorial, you will discover how to use batch normalization to accelerate the training of deep learning neural networks in Python with Keras.

After completing this tutorial, you will know:

- How to create and configure a BatchNormalization layer using the Keras API.

- How to add the BatchNormalization layer to deep learning neural network models.

- How to update an MLP model to use batch normalization to accelerate training on a binary classification problem.
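Configuring the layer mostly means choosing how it tracks statistics for use at prediction time. During training, a batch normalization layer keeps an exponential moving average of the per-batch mean and variance. At inference, it uses those stored values in place of the batch statistics. The plain-Python sketch below mirrors the documented defaults of the Keras `BatchNormalization` arguments `momentum=0.99` and `epsilon=0.001`, with the moving mean initialized to zeros and the moving variance to ones. The class `RunningBatchNormStats` is hypothetical and tracks a single feature for simplicity. It is an illustration of the idea, not Keras's implementation.

```python
import math

class RunningBatchNormStats:
    """Hypothetical helper: moving statistics for one feature.

    Defaults mirror Keras BatchNormalization(momentum=0.99, epsilon=0.001).
    """

    def __init__(self, momentum=0.99, epsilon=1e-3):
        self.momentum = momentum
        self.epsilon = epsilon
        self.moving_mean = 0.0  # moving mean starts at zero
        self.moving_var = 1.0   # moving variance starts at one

    def update(self, batch_mean, batch_var):
        # Exponential moving average of the statistics seen during training
        self.moving_mean = self.momentum * self.moving_mean + (1 - self.momentum) * batch_mean
        self.moving_var = self.momentum * self.moving_var + (1 - self.momentum) * batch_var

    def normalize_inference(self, x, gamma=1.0, beta=0.0):
        # At inference, the stored moving statistics replace the batch statistics
        return gamma * (x - self.moving_mean) / math.sqrt(self.moving_var + self.epsilon) + beta


stats = RunningBatchNormStats()
for _ in range(2000):
    # Pretend every training batch has mean 10 and variance 4
    stats.update(batch_mean=10.0, batch_var=4.0)
print(round(stats.moving_mean, 3), round(stats.moving_var, 3))  # 10.0 4.0
```

A momentum close to 1 makes the moving averages change slowly, so they reflect many batches rather than the most recent one. That is why the stored statistics in the usage example only settle after many updates.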

Kick-start your project with my new book Better Deep Learning, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

- Updated Oct/2019: Updated for Keras 2.3 and TensorFlow 2.0.
