Feature Importance and Feature Selection With XGBoost in Python

Last Updated on August 27, 2020

A benefit of using ensembles of decision trees, such as gradient boosting, is that they can automatically provide estimates of feature importance from a trained predictive model.

In this post, you will discover how to estimate the importance of features for a predictive modeling problem using the XGBoost library in Python.

After reading this post, you will know:

- How feature importance is calculated using the gradient boosting algorithm.

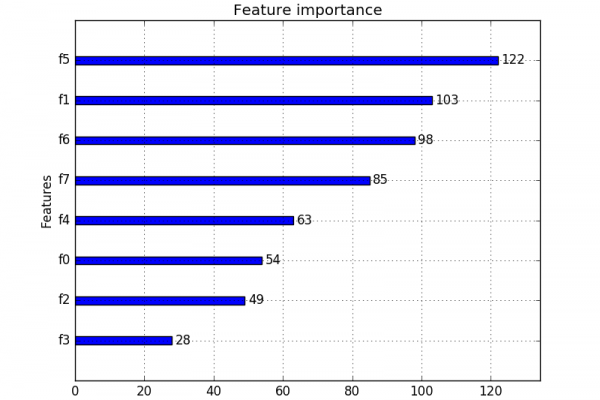

- How to plot feature importance in Python calculated by the XGBoost model.

- How to use feature importance calculated by XGBoost to perform feature selection.


Let’s get started.

- Update Jan/2017: Updated to reflect changes in scikit-learn API version 0.18.1.

- Update Mar/2018: Added alternate link to download the dataset as the original appears to have been taken down.

- Update Apr/2020: Updated example for XGBoost 1.0.2.

