Exploring Hybrid CNN-transformers with Block-wisely Self-supervised Neural Architecture Search

BossNAS

This repository contains the PyTorch code and pretrained models for our paper: BossNAS: Exploring Hybrid CNN-transformers with Block-wisely Self-supervised Neural Architecture Search.

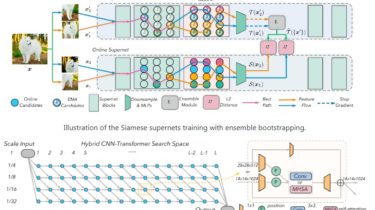

Illustration of the fabric-like Hybrid CNN-transformer Search Space with flexible down-sampling positions.

Our Results and Trained Models

Here is a summary of our searched models:

| Model | MAdds | Steptime | Top-1 (%) | Top-5 (%) | Url |
|---|---|---|---|---|---|
| BossNet-T0 w/o SE | 3.4B | 101ms | 80.5 | 95.0 | checkpoint |
| BossNet-T0 | 3.4B | 115ms | 80.8 | 95.2 | checkpoint |
| BossNet-T0^ | 5.7B | 147ms | 81.6 | 95.6 | same as above |
| BossNet-T1 | 7.9B | 156ms | 81.9 | 95.6 | checkpoint |
| BossNet-T1^ | 10.5B | 165ms | 82.2 | 95.7 | same as above |

Here is a summary of architecture rating accuracy of our method:

| Search space | Dataset | Kendall tau | Spearman rho | Pearson R |
|---|---|---|---|---|
| MBConv | ImageNet | 0.65 | 0.78 | 0.85 |
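
For readers unfamiliar with the three metrics above, here is a minimal pure-Python sketch of how rank and linear correlation between predicted architecture ratings and ground-truth accuracies can be computed. It is not the evaluation code from this repository: the function names and the sample numbers are hypothetical, and the sketch assumes there are no tied values.

```python
from itertools import combinations
from math import sqrt


def pearson_r(x, y):
    """Linear correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def ranks(values):
    """Rank of each value, 1 = smallest (assumes no ties)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman_rho(x, y):
    """Spearman rho is the Pearson correlation of the ranks."""
    return pearson_r(ranks(x), ranks(y))


def kendall_tau(x, y):
    """Kendall tau: (concordant - discordant) pairs over all pairs."""
    concordant = discordant = 0
    for i, j in combinations(range(len(x)), 2):
        sign = (x[i] - x[j]) * (y[i] - y[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(x) * (len(x) - 1) // 2
    return (concordant - discordant) / n_pairs


# Hypothetical data: ratings predicted by a search method and the
# ground-truth top-1 accuracies of the same five architectures.
predicted = [0.2, 0.5, 0.4, 0.9, 0.7]
true_acc = [70.1, 72.3, 73.0, 76.5, 75.2]

tau = kendall_tau(predicted, true_acc)    # 0.8 (9 concordant, 1 discordant pair)
rho = spearman_rho(predicted, true_acc)   # 0.9
r = pearson_r(predicted, true_acc)
```

Kendall tau and Spearman rho depend only on the ordering of the architectures, which is what matters when a search method is used to pick the best candidates; Pearson R additionally reflects how linearly the predicted ratings track the true accuracies.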