End-to-end Point Cloud Correspondences with Transformers

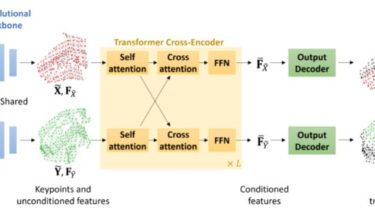

This repository contains the source code for REGTR. REGTR uses multiple transformer attention layers to directly predict, for each downsampled point, its corresponding location in the other point cloud. Unlike typical correspondence-based registration algorithms, the predicted correspondences are clean enough that no additional RANSAC step is required, which makes registration fast yet accurate.
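Because the predicted correspondences are meant to be clean, a rigid transform can be fitted to them directly in closed form instead of through RANSAC hypothesis testing. The sketch below shows a weighted Kabsch (SVD-based least-squares) solver in NumPy. The function name `weighted_rigid_transform` and the optional per-correspondence `weights` (for example, a confidence score) are illustrative assumptions, not REGTR's actual code or API.

```python
import numpy as np


def weighted_rigid_transform(src, tgt, weights=None):
    """Closed-form least-squares rigid transform with R @ src_i + t ~= tgt_i.

    src, tgt: (N, 3) arrays of corresponding points.
    weights:  optional (N,) non-negative weights; a hypothetical stand-in
              for a per-correspondence confidence score.
    """
    src = np.asarray(src, dtype=float)
    tgt = np.asarray(tgt, dtype=float)
    w = np.ones(len(src)) if weights is None else np.asarray(weights, dtype=float)
    w = w / w.sum()  # normalise so the weights sum to 1

    # Weighted centroids of both point sets
    src_mean = (w[:, None] * src).sum(axis=0)
    tgt_mean = (w[:, None] * tgt).sum(axis=0)

    # Weighted 3x3 cross-covariance of the centred points
    H = (w[:, None] * (src - src_mean)).T @ (tgt - tgt_mean)

    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that det(R) = +1 (no reflection)
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_mean - R @ src_mean
    return R, t


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    src = rng.normal(size=(100, 3))
    # Synthetic ground truth: 30 degree rotation about z plus a translation
    theta = np.deg2rad(30.0)
    R_gt = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                     [np.sin(theta),  np.cos(theta), 0.0],
                     [0.0, 0.0, 1.0]])
    t_gt = np.array([0.5, -0.2, 1.0])
    tgt = src @ R_gt.T + t_gt
    R_est, t_est = weighted_rigid_transform(src, tgt)
    print(np.allclose(R_est, R_gt), np.allclose(t_est, t_gt))  # True True
```

With exact correspondences the solver recovers the transform to floating-point precision; a correspondence given zero weight has no influence on the fit, which is one simple way confidence scores could down-weight unreliable matches.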

If you find this useful, please cite:

@inproceedings{yew2022regtr,
  title={REGTR: End-to-end Point Cloud Correspondences with Transformers},
  author={Yew, Zi Jian and Lee, Gim Hee},
  booktitle={CVPR},
  year={2022},
}

Environment

Our model is trained with the following environment:

Other required packages can