Cost-Sensitive Logistic Regression for Imbalanced Classification

Last Updated on August 28, 2020

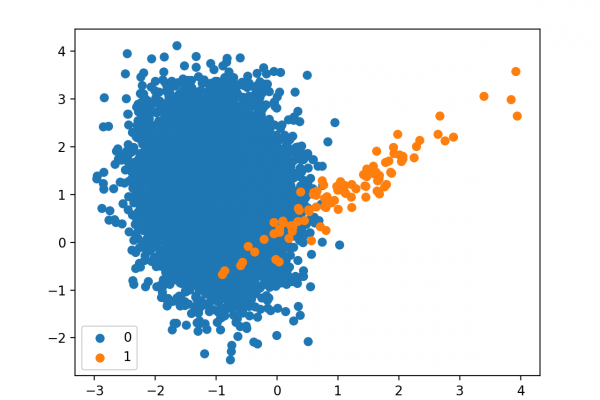

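Standard logistic regression does not support imbalanced classification directly, because every training error contributes equally to the loss regardless of which class the example belongs to.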

Instead, the training algorithm used to fit the logistic regression model must be modified to take the skewed class distribution into account. This can be achieved by specifying a class weighting configuration that influences the amount by which the logistic regression coefficients are updated during training.
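To make the mechanism concrete, here is a minimal NumPy sketch of a class-weighted log loss and its gradient. The function name `weighted_log_loss_and_grad` and its dict-based `class_weight` argument are hypothetical, chosen for illustration. A real solver such as scikit-learn's also adds regularization and scales the loss differently. Each example's loss term and gradient term is multiplied by the weight of its class, so a larger minority-class weight makes errors on those examples move the coefficients further.

```python
import numpy as np


def weighted_log_loss_and_grad(w, b, X, y, class_weight):
    """Class-weighted binary cross-entropy and its gradient (hypothetical helper).

    class_weight maps each label (0 or 1) to a per-example multiplier; this
    interface is an illustrative assumption, not a library API.
    """
    z = X @ w + b
    p = 1.0 / (1.0 + np.exp(-z))  # predicted probability of class 1
    eps = 1e-12  # guard against log(0)
    sw = np.where(y == 1, class_weight[1], class_weight[0])  # per-example weights
    losses = -(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
    loss = np.sum(sw * losses) / len(y)
    # d(loss)/dz is (p - y) per example, scaled by that example's class weight,
    # so heavily weighted examples dominate the coefficient update.
    residual = sw * (p - y)
    grad_w = X.T @ residual / len(y)
    grad_b = np.sum(residual) / len(y)
    return loss, grad_w, grad_b


# Tiny example: three majority (0) examples and one minority (1) example
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = np.array([0, 0, 0, 1])
w, b = np.zeros(1), 0.0

loss_eq, gw_eq, _ = weighted_log_loss_and_grad(w, b, X, y, {0: 1.0, 1: 1.0})
loss_up, gw_up, _ = weighted_log_loss_and_grad(w, b, X, y, {0: 1.0, 1: 10.0})
print(gw_eq, gw_up)  # [0.] [-3.375]
```

With equal weights the function reduces to the ordinary mean log loss, and at this starting point the gradient on `w` is zero. Raising the minority weight to 10 produces a negative gradient, so a gradient-descent step increases `w` and pushes the model toward predicting class 1 for larger inputs.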

The weighting can penalize the model less for errors made on examples from the majority class and more for errors made on examples from the minority class. The result is a version of logistic regression that often performs better on imbalanced classification tasks, generally referred to as cost-sensitive or weighted logistic regression.
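In scikit-learn, this kind of weighting can be passed through the `class_weight` argument of `LogisticRegression`, which accepts a dict mapping each class label to a weight. The sketch below assumes a synthetic dataset with roughly 100 majority examples for every minority example (the `make_classification` settings are illustrative choices) and compares a standard model with one that weights minority-class errors 100 times more heavily. Counting predictions on the training data is only a quick sanity check, not a proper evaluation.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Illustrative synthetic dataset with about a 1:100 minority-to-majority ratio
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
                           n_clusters_per_class=1, weights=[0.99], flip_y=0,
                           random_state=2)

# Standard logistic regression: every error costs the same
standard = LogisticRegression(solver="lbfgs")
standard.fit(X, y)

# Cost-sensitive variant: an error on class 1 costs 100x an error on class 0
weighted = LogisticRegression(solver="lbfgs", class_weight={0: 0.01, 1: 1.0})
weighted.fit(X, y)

print("minority examples:", int(y.sum()))
print("predicted minority (standard):", int(standard.predict(X).sum()))
print("predicted minority (weighted):", int(weighted.predict(X).sum()))
```

The weighted model typically labels more examples as the minority class, trading some majority-class errors for fewer missed minority examples.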

In this tutorial, you will discover cost-sensitive logistic regression for imbalanced classification.

After completing this tutorial, you will know:

- Why standard logistic regression does not directly support imbalanced classification.

- How logistic regression can be modified to weight the model's error by class when fitting the coefficients.

- How to configure class weight for logistic regression and how to grid search different class weight configurations.
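A minimal sketch of configuring and grid searching class weights, assuming scikit-learn: `GridSearchCV` tries several `class_weight` dicts plus the built-in `"balanced"` option, scored by ROC AUC under repeated stratified 10-fold cross-validation. The candidate weightings and dataset settings are illustrative assumptions. The `"balanced"` heuristic sets each class weight to `n_samples / (n_classes * count_of_class)`; the code reproduces that formula by hand and prints it next to scikit-learn's `compute_class_weight` for comparison.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, RepeatedStratifiedKFold
from sklearn.utils.class_weight import compute_class_weight

# Illustrative imbalanced dataset (about 1:100 minority to majority)
X, y = make_classification(n_samples=10000, n_features=2, n_redundant=0,
                           n_clusters_per_class=1, weights=[0.99], flip_y=0,
                           random_state=2)

# The "balanced" heuristic computed by hand: n_samples / (n_classes * class_count)
counts = np.bincount(y)
balanced_weights = len(y) / (len(counts) * counts)
print("balanced weights:", balanced_weights)
print("sklearn:", compute_class_weight("balanced", classes=np.unique(y), y=y))

# Candidate weightings, from favoring the majority class to favoring the minority class
balance = [{0: 100, 1: 1}, {0: 10, 1: 1}, {0: 1, 1: 1}, {0: 1, 1: 10}, {0: 1, 1: 100}]
param_grid = {"class_weight": balance + ["balanced"]}

# Stratified folds keep the class ratio in every split; ROC AUC does not depend on a threshold
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)
grid = GridSearchCV(LogisticRegression(solver="lbfgs"), param_grid,
                    scoring="roc_auc", cv=cv)
grid.fit(X, y)

print("best: %.3f using %s" % (grid.best_score_, grid.best_params_))
for mean, params in zip(grid.cv_results_["mean_test_score"], grid.cv_results_["params"]):
    print("%.3f with %r" % (mean, params))
```

ROC AUC is used here because it summarizes ranking quality across all thresholds, which suits a 1:100 dataset better than plain accuracy; other imbalance-aware metrics could be substituted through the `scoring` argument.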
