Cost-Sensitive Decision Trees for Imbalanced Classification

Last Updated on August 21, 2020

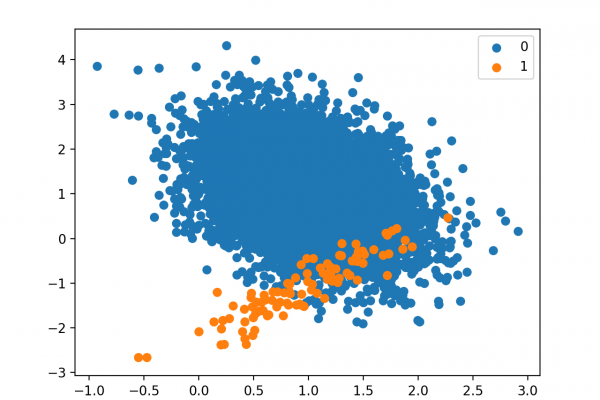

The decision tree algorithm is effective for balanced classification, although it does not perform well on imbalanced datasets.

The split points of the tree are chosen to best separate examples into two groups with minimum mixing. When both groups are dominated by examples from the majority class, the criterion used to select a split point reports good separation even though, in fact, the examples from the minority class are being ignored.
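To make this concrete, here is a minimal Python sketch that scores one candidate split with Gini impurity, the default split criterion of scikit-learn's decision trees. The class counts are hypothetical: 990 majority and 10 minority examples, with the minority examples spread evenly across both child groups.

```python
# Minimal sketch: Gini impurity of a candidate split on an imbalanced dataset.
# The class counts below are hypothetical, chosen only for illustration.


def gini(counts):
    """Gini impurity of a node given per-class example counts."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)


def split_gini(left, right):
    """Size-weighted average Gini impurity of the two child nodes."""
    n_left, n_right = sum(left), sum(right)
    n = n_left + n_right
    return (n_left / n) * gini(left) + (n_right / n) * gini(right)


# Per-class counts as [majority, minority]; 990 vs 10 examples (99:1).
left = [600, 5]   # minority examples land in both children...
right = [390, 5]  # ...so neither child isolates the minority class

print(f"left child:  {gini(left):.4f}")
print(f"right child: {gini(right):.4f}")
print(f"split score: {split_gini(left, right):.4f}")
```

Both children score below 0.03 on a scale where 0.5 is the worst possible two-class impurity, so the split looks almost pure, yet neither group separates out the minority class.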

This problem can be overcome by modifying the criterion used to evaluate split points so that it takes the importance of each class into account, an approach generally referred to as a weighted split point or a weighted decision tree.
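One way to sketch a weighted criterion, assuming Gini impurity and hypothetical counts from a 99:1 dataset, is to multiply each class's count by its weight before computing impurity. The code below compares a split that spreads the 10 minority examples across both children with one that puts them all in one child, first with equal class weights and then with a minority weight of 99 (the inverse class ratio, an illustrative choice rather than a prescribed value).

```python
# Minimal sketch of a class-weighted Gini criterion: each class's count is
# multiplied by its weight before impurity is computed. The counts and the
# weights below are hypothetical illustration values.


def weighted_gini(counts, weights):
    """Gini impurity computed from class-weighted counts."""
    weighted = [c * w for c, w in zip(counts, weights)]
    total = sum(weighted)
    return 1.0 - sum((c / total) ** 2 for c in weighted)


def weighted_split_gini(left, right, weights):
    """Average child impurity, each child weighted by its total class weight."""
    w_left = sum(c * w for c, w in zip(left, weights))
    w_right = sum(c * w for c, w in zip(right, weights))
    total = w_left + w_right
    left_part = (w_left / total) * weighted_gini(left, weights)
    right_part = (w_right / total) * weighted_gini(right, weights)
    return left_part + right_part


# Counts are [majority, minority] for a 990:10 dataset; lower scores are better.
splits = {
    "spreads minority": ([600, 5], [390, 5]),
    "isolates minority": ([600, 10], [390, 0]),
}
equal = [1.0, 1.0]
inverse_freq = [1.0, 99.0]  # 990 / 10 = 99; only the ratio between weights matters

for name, (left, right) in splits.items():
    plain = weighted_split_gini(left, right, equal)
    cost = weighted_split_gini(left, right, inverse_freq)
    print(f"{name:18s} unweighted={plain:.4f} weighted={cost:.4f}")
```

With equal weights the two splits score almost identically (about 0.0198 vs 0.0197), so the criterion barely cares where the minority examples go. With a minority weight of 99 the scores diverge (about 0.494 vs 0.377), and the split that isolates the minority class is clearly preferred. Multiplying every class weight by the same constant leaves the scores unchanged.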

In this tutorial, you will discover the weighted decision tree for imbalanced classification.

After completing this tutorial, you will know:

- Why the standard decision tree algorithm does not perform well on imbalanced classification.

- How the decision tree algorithm can be modified to weight model error by class weight when selecting splits.

- How to configure class weight for the decision tree algorithm and how to grid search different class weight configurations.
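In scikit-learn, the `class_weight` argument of `DecisionTreeClassifier` scales each example's weight by the weight of its class, and the split criterion is computed from those weighted counts. The sketch below, assuming scikit-learn is installed, fits such a tree on a synthetic dataset with roughly a 1:100 class ratio from `make_classification`, then grid searches a handful of class weightings, including `'balanced'`, with `GridSearchCV` scored by ROC AUC. The dataset parameters and grid values are illustrative assumptions, not results or code from this tutorial.

```python
# Sketch: cost-sensitive decision tree via scikit-learn's class_weight,
# tuned with a grid search. Dataset and grid values are illustrative.
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, RepeatedStratifiedKFold
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary problem with roughly a 1:100 minority-to-majority ratio.
X, y = make_classification(
    n_samples=10000,
    n_features=2,
    n_redundant=0,
    n_clusters_per_class=1,
    weights=[0.99],
    flip_y=0,
    random_state=3,
)

# A fixed weighting: errors on class 1 (minority) cost 100x errors on class 0.
# The grid search below overrides this value for each candidate.
model = DecisionTreeClassifier(class_weight={0: 1, 1: 100}, random_state=1)

# Candidate class weightings; 'balanced' uses inverse class frequencies.
param_grid = {
    "class_weight": [
        {0: 100, 1: 1},
        {0: 10, 1: 1},
        {0: 1, 1: 1},
        {0: 1, 1: 10},
        {0: 1, 1: 100},
        "balanced",
    ]
}

# Stratified folds keep the rare class present in every test fold.
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=2, random_state=1)
search = GridSearchCV(model, param_grid, scoring="roc_auc", cv=cv)
search.fit(X, y)

print("best ROC AUC: %.3f using %s" % (search.best_score_, search.best_params_))
for mean, params in zip(
    search.cv_results_["mean_test_score"], search.cv_results_["params"]
):
    print("%.3f with %r" % (mean, params))
```

The `'balanced'` option sets each class weight to `n_samples / (n_classes * count)`, which is the inverse class frequency up to a constant factor, so it is a reasonable default to include alongside hand-picked ratios.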
