Comparing 13 Algorithms on 165 Datasets (hint: use Gradient Boosting)

Last Updated on August 21, 2019

Which machine learning algorithm should you use?

It is a central question in applied machine learning.

A recent paper by Randal Olson and others attempts to answer it, offering a guide to the algorithms and parameters to try on your problem first, before spot-checking a broader suite of algorithms.

In this post, you will discover a study that evaluated many machine learning algorithms across a large number of datasets, along with its findings and recommendations.

After reading this post, you will know:

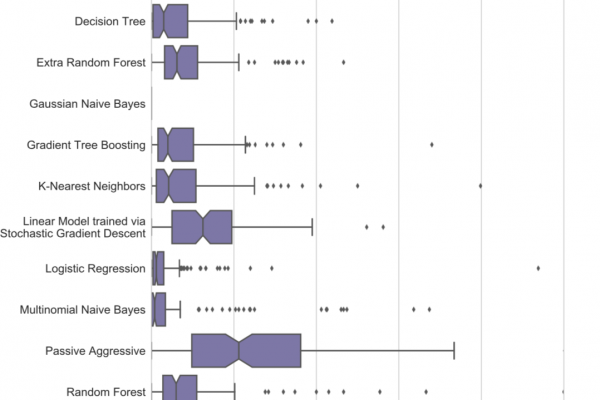

- That ensemble tree algorithms perform well across a wide range of datasets.

- That it is critical to test a suite of algorithms on a problem as there is no silver bullet algorithm.

- That it is critical to test a suite of configurations for a given algorithm as it can result in as much as a 50% improvement on some problems.
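To make the last two points concrete, here is a minimal sketch of spot-checking a small suite of algorithms and then trying a few configurations of one of them. It is not the study's protocol: the use of scikit-learn, the synthetic dataset, the three models, the accuracy metric, and the tiny parameter grid are all assumptions chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, cross_val_score

# Synthetic stand-in data (an assumption; substitute your own X and y).
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=5, random_state=1
)

# Step 1: spot-check a small suite of algorithms with 5-fold cross-validation.
models = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "random_forest": RandomForestClassifier(n_estimators=50, random_state=1),
    "gradient_boosting": GradientBoostingClassifier(n_estimators=50, random_state=1),
}
scores = {}
for name, model in models.items():
    # Mean accuracy across the 5 folds.
    scores[name] = cross_val_score(model, X, y, cv=5, scoring="accuracy").mean()
    print(f"{name}: {scores[name]:.3f}")

# Step 2: test a few configurations of one algorithm (illustrative grid only).
param_grid = {"learning_rate": [0.01, 0.1], "max_depth": [2, 3]}
search = GridSearchCV(
    GradientBoostingClassifier(n_estimators=50, random_state=1),
    param_grid,
    cv=5,
    scoring="accuracy",
)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

On a real problem you would likely widen both the model suite and the grid, and pick a metric that suits your data, for example one that accounts for class imbalance.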

Kick-start your project with my new book XGBoost With Python, including step-by-step tutorials and the Python source code files for all examples.

Let’s get started.

