A command-line program to download media on OnlyFans

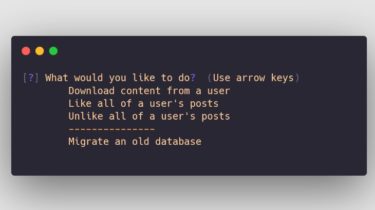

onlyfans-scraper: a command-line program to download media, such as posts, and more from creators on OnlyFans.

Installation

You can install this program by entering the following in your console:

`pip install onlyfans-scraper`

Setup

Before you can fully use it, you need to fill out some fields in an `auth.json` file. This file will be created for you when you run the program for the first time. These are the fields:

`{ "auth": { "app-token": "33d57ade8c02dbc5a333db99ff9ae26a", "sess": "", "auth_id": "", "auth_uniq_": "",` […]
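Because the program only works once these fields are filled in, it can help to check which ones are still empty. The following is a minimal sketch, not part of onlyfans-scraper itself: the helper name `find_empty_fields` is hypothetical, and it assumes only that `auth.json` is a JSON object with an `"auth"` object of string fields, as in the excerpt. It does not know the full field list and simply reports whatever string fields under `"auth"` are blank.

```python
import json
from pathlib import Path


def find_empty_fields(auth_path: Path) -> list[str]:
    """Return the names of fields under "auth" whose values are blank strings.

    Hypothetical helper: assumes auth.json has the shape
    {"auth": {"field": "value", ...}}. It is not the program's own validation.
    """
    with auth_path.open(encoding="utf-8") as f:
        data = json.load(f)
    auth = data.get("auth", {})
    # A field counts as "not filled out" if it is an empty or whitespace-only string.
    return [
        name
        for name, value in auth.items()
        if isinstance(value, str) and not value.strip()
    ]
```

For example, `print(find_empty_fields(Path("auth.json")))` run next to a freshly created file would list the fields you still need to supply.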
