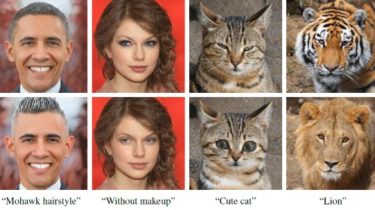

Face Stylization based on the paper “AgileGAN: Stylizing Portraits by Inversion-Consistent Transfer Learning”

Introduction

This repo is an efficient toolkit for face stylization based on the paper “AgileGAN: Stylizing Portraits by Inversion-Consistent Transfer Learning”. Because the training code of AgileGAN has not been released yet, this repo only adopts the AgileGAN pipeline and combines it with other helpful practices from the literature. The project is built on MMCV and MMGEN; stars and forks are welcome 🤗!

Results from FaceStylor trained by MMGEN

Requirements

CUDA 10.0 / CUDA 10.1
Python […]
