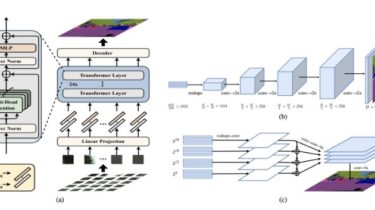

Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

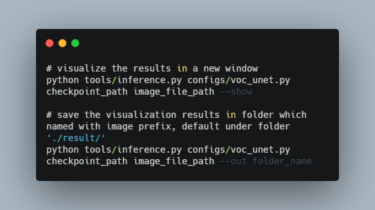

SETR – Pytorch

Since the original paper (Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers) has no official code, I implemented SETR-Progressive UPsampling (SETR-PUP) using PyTorch.

Original paper: Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers.

ViT

The ViT model is also implemented, and you can use it for image classification.

Usage

SETR

```python
from SETR.transformer_seg import SETRModel
import torch

if __name__ == "__main__":
    net = SETRModel(patch_size=(32, 32),
                    in_channels=3,
                    out_channels=1,
                    hidden_size=1024,
                    num_hidden_layers=8,
                    num_attention_heads=16,
                    decode_features=[512, 256, 128, 64])
    t1 = torch.rand(1, 3, […]
```
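To make the sequence-to-sequence view concrete, the sketch below is a small shape calculator rather than a model. It does not call the repo's `SETRModel`. It shows how an image is cut into patches, flattened, and projected into a token sequence (as in ViT), and how a progressive-upsampling decoder grows the patch grid back toward full resolution. The helper names `patch_grid`, `encoder_sequence`, and `pup_shapes` are hypothetical. The assumption that each decoder stage maps to one entry of `decode_features` and doubles the spatial size follows the SETR paper's description of PUP (alternating convolutions with 2x upsampling), not a reading of this repo's code. The 256 x 256 input is an arbitrary illustrative size and is not the elided value from the README example.

```python
from typing import List, Tuple

Size = Tuple[int, int]


def patch_grid(image_hw: Size, patch_hw: Size) -> Size:
    """Rows and columns of non-overlapping patches covering the image."""
    (h, w), (ph, pw) = image_hw, patch_hw
    if h % ph or w % pw:
        raise ValueError("image height/width must be divisible by the patch size")
    return h // ph, w // pw


def encoder_sequence(image_hw: Size, patch_hw: Size, in_channels: int,
                     hidden_size: int) -> Tuple[int, int, int]:
    """Return (seq_len, flattened patch length, embedding width).

    Each patch is flattened to ph * pw * in_channels values and linearly
    projected to hidden_size, so the image becomes a sequence of seq_len
    tokens, each hidden_size wide.
    """
    rows, cols = patch_grid(image_hw, patch_hw)
    patch_dim = patch_hw[0] * patch_hw[1] * in_channels
    return rows * cols, patch_dim, hidden_size


def pup_shapes(image_hw: Size, patch_hw: Size,
               decode_features: List[int]) -> Tuple[List[Tuple[int, int, int]], Size]:
    """Shapes after each assumed (conv -> 2x upsample) decoder stage.

    The encoder output is reshaped back to a (hidden, rows, cols) grid; each
    stage is ASSUMED to set the channel count to decode_features[i] and to
    double rows and cols. Also returns the leftover (height, width) factor a
    final upsample would need to reach the input resolution.
    """
    rows, cols = patch_grid(image_hw, patch_hw)
    shapes = []
    for channels in decode_features:
        rows, cols = rows * 2, cols * 2
        shapes.append((channels, rows, cols))
    leftover = (image_hw[0] // rows, image_hw[1] // cols)
    return shapes, leftover


if __name__ == "__main__":
    image_hw = (256, 256)  # hypothetical input size, for illustration only
    patch_hw = (32, 32)    # patch_size from the README example
    print(encoder_sequence(image_hw, patch_hw, in_channels=3, hidden_size=1024))
    shapes, leftover = pup_shapes(image_hw, patch_hw, [512, 256, 128, 64])
    print(shapes, leftover)
```

With `patch_size=(32, 32)` a 256 x 256 image becomes an 8 x 8 grid, i.e. 64 tokens of width 1024. Four doubling stages bring the grid to 128 x 128, so under these assumptions one more 2x upsample would be needed to match the input. With 16 x 16 patches, four stages would already be enough (2^4 = 16).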
