Category: QUESTION ANSWERING

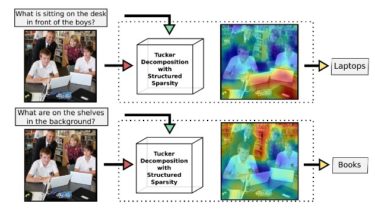

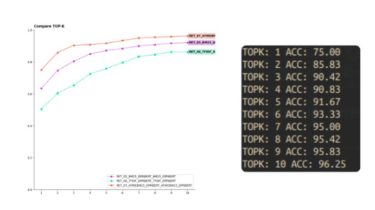

Visual Question Answering in Pytorch

/!\ New version of pytorch for VQA available here: https://github.com/Cadene/block.bootstrap.pytorch

This repo was made by Remi Cadene (LIP6) and Hedi Ben-Younes (LIP6-Heuritech), two PhD students working on VQA at UPMC-LIP6, and their professors Matthieu Cord (LIP6) and Nicolas Thome (LIP6-CNAM). We developed this code as part of a research paper called MUTAN: Multimodal Tucker Fusion for VQA, which is (as far as we know) the current state of the art on the VQA 1.0 dataset. The goal of this repo is two […]

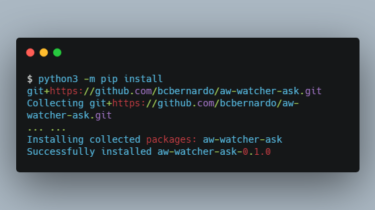

An ActivityWatch watcher to pose questions to the user and record her answers

aw-watcher-ask: An ActivityWatch watcher to pose questions to the user and record her answers. This watcher uses Zenity to present dialog boxes to the user, and stores her answers in a locally running instance of ActivityWatch. This can be useful for polling all sorts of information on a periodic or random basis. The inspiration comes from the experience sampling method (ESM) used in psychological studies, as well as from the quantified self movement. Install (using pip/pipx): Create a virtual environment, […]

Unsupervised Multi-hop Question Answering by Question Generation

This repository contains code and models for the paper: Unsupervised Multi-hop Question Answering by Question Generation (NAACL 2021). We propose MQA-QG, an unsupervised question answering framework that can generate human-like multi-hop training pairs from both homogeneous and heterogeneous data sources. We find that we can train a competent multi-hop QA model with only generated data. The F1 gap between the unsupervised and fully-supervised models is less than 20 on both the HotpotQA and the HybridQA datasets. Pretraining a multi-hop QA […]

Baseline code for Korean open domain question answering

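Open-Domain Question Answering (ODQA) is the task of finding answers to natural-language questions in a collection of documents covering a wide range of topics. No passage is provided alongside the user's question. A step is therefore needed to find documents that can answer the question in a pre-built knowledge resource (in this post, Korean Wikipedia). VumBleBot was designed to solve the ODQA problem. It implements a Retriever, which finds documents related to the question, and a Reader, which reads the related documents and produces a concise answer. Built from these two stages, VumBleBot is a question answering system that readily answers whatever difficult question you ask it. In the wrap-up report, the models, experiments […]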
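The two-stage Retriever and Reader design described above can be illustrated with a minimal sketch. This is not VumBleBot's code: the token-overlap scoring, the sentence-picking reader, the function names, and the two-document English corpus are all assumptions chosen to keep the example self-contained, standing in for a real retriever and a neural reader.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def retrieve(question, documents, top_k=1):
    """Toy retriever: rank documents by the number of tokens shared with the question."""
    q_tokens = Counter(tokenize(question))
    scored = []
    for doc in documents:
        d_tokens = Counter(tokenize(doc))
        overlap = sum((q_tokens & d_tokens).values())
        scored.append((overlap, doc))
    # sorted() is stable, so ties keep their original corpus order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in ranked[:top_k]]


def read(question, document):
    """Toy reader: return the sentence that shares the most tokens with the question."""
    q_tokens = set(tokenize(question))
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    return max(sentences, key=lambda s: len(q_tokens & set(tokenize(s))))


def answer(question, documents):
    """Retrieve the best-matching document, then read an answer out of it."""
    best_doc = retrieve(question, documents, top_k=1)[0]
    return read(question, best_doc)


# Hypothetical miniature corpus standing in for the knowledge resource.
corpus = [
    "Seoul is the capital of South Korea. It lies on the Han River.",
    "Hangul is the Korean alphabet. It was created in the 15th century.",
]

print(answer("What is the capital of South Korea?", corpus))
```

The point of the split is that the reader only ever sees the few documents the retriever returns, so each stage can be swapped or improved independently.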

ParsiNLU: A Suite of Language Understanding Challenges for Persian

Tasks: Machine Translation, Question Answering, Persian Sentiment Analysis, Natural Language Understanding, Natural Language Inference, Reading Comprehension

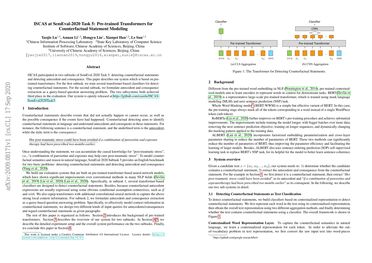

ISCAS at SemEval-2020 Task 5: Pre-trained Transformers for Counterfactual Statement Modeling

ISCAS participated in two subtasks of SemEval 2020 Task 5: detecting counterfactual statements and detecting antecedent and consequence. This paper describes our system which is based on pre-trained transformers… For the first subtask, we train several transformer-based classifiers for detecting counterfactual statements. For the second subtask, we formulate antecedent and consequence extraction as a query-based question answering problem. The two subsystems both achieved third place in the evaluation. Our system is openly released at https://github.com/casnlu/ISCAS-SemEval2020Task5.
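To make the query-based formulation concrete, the sketch below turns one annotated sentence into two extractive QA examples, one per span type. It is only an illustration of the general idea: the query strings, the field names, the helper `to_qa_examples`, and the example sentence are assumptions, not the format used by the ISCAS system.

```python
def to_qa_examples(sentence, antecedent, consequence):
    """Build one extractive QA example per span type.

    The query names the span type, the context is the full sentence, and the
    answer is given as character offsets into that context.
    """
    examples = []
    # Hypothetical queries; the real system may phrase them differently.
    for query, span in (("antecedent", antecedent), ("consequence", consequence)):
        start = sentence.find(span)
        if start == -1:
            raise ValueError(f"{query} span not found in sentence")
        examples.append(
            {
                "question": query,
                "context": sentence,
                "answer_text": span,
                "answer_start": start,
                "answer_end": start + len(span),  # exclusive end offset
            }
        )
    return examples


# Made-up counterfactual sentence, used only for illustration.
sentence = "If I had studied harder, I would have passed the exam."
examples = to_qa_examples(
    sentence,
    antecedent="If I had studied harder",
    consequence="I would have passed the exam",
)

for ex in examples:
    print(ex["question"], "->", ex["context"][ex["answer_start"]:ex["answer_end"]])
```

Framed this way, a single span-extraction model can serve both span types, since only the query changes between examples.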
