Document Classification using NLP

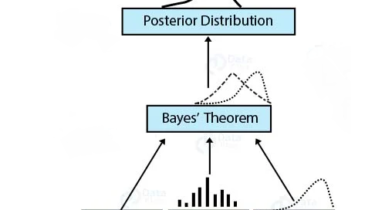

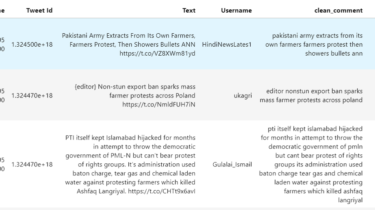

Perform document classification into four predefined categories (World, Sports, Business, Sci/Tech), and compare classifier accuracy across models ranging from Naïve Bayes to a Convolutional Neural Network (CNN) and an RCNN. Use different feature engineering techniques and additional Natural Language Processing (NLP) features to build an accurate text classifier. Document/Text classification is an important task that …
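As a rough illustration of the Naïve Bayes end of that comparison, here is a minimal from-scratch sketch of a multinomial Naïve Bayes classifier with add-alpha (Laplace) smoothing. It is not the project's implementation. The choice of unigram plus bigram bag-of-words features stands in, as an assumption, for "feature engineering". The names `tokenize`, `features`, `MultinomialNB` and `accuracy` are hypothetical, and the toy training sentences are invented purely for illustration, not taken from the project's dataset.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def features(text, use_bigrams=True):
    """Bag-of-words features: unigrams, optionally plus adjacent-word bigrams."""
    tokens = tokenize(text)
    feats = list(tokens)
    if use_bigrams:
        feats += [a + "_" + b for a, b in zip(tokens, tokens[1:])]
    return feats


class MultinomialNB:
    """Hypothetical multinomial Naive Bayes with add-alpha (Laplace) smoothing."""

    def __init__(self, alpha=1.0, use_bigrams=True):
        self.alpha = alpha
        self.use_bigrams = use_bigrams

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        doc_counts = Counter(labels)
        # Log prior: fraction of training documents in each class.
        self.log_prior = {c: math.log(doc_counts[c] / len(labels)) for c in self.classes}
        # Per-class feature counts: class -> feature -> count.
        self.word_counts = defaultdict(Counter)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(features(doc, self.use_bigrams))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.total = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict_one(self, doc):
        v = len(self.vocab)
        best, best_score = None, -math.inf
        for c in self.classes:
            score = self.log_prior[c]
            denom = self.total[c] + self.alpha * v
            for f in features(doc, self.use_bigrams):
                if f in self.vocab:  # features never seen in training are skipped
                    score += math.log((self.word_counts[c][f] + self.alpha) / denom)
            if score > best_score:
                best, best_score = c, score
        return best

    def predict(self, docs):
        return [self.predict_one(d) for d in docs]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical toy data, invented for illustration only.
train_docs = [
    "Leaders meet at the UN summit on peace talks",
    "Election results spark protests in the capital",
    "Striker scores twice as the team wins the cup final",
    "Tennis champion advances to the semifinal match",
    "Shares rise as the company reports record quarterly profit",
    "Central bank raises interest rates to curb inflation",
    "New smartphone chip boosts battery life and speed",
    "Researchers release open source software for genome analysis",
]
train_labels = ["World", "World", "Sports", "Sports",
                "Business", "Business", "Sci/Tech", "Sci/Tech"]

model = MultinomialNB(alpha=1.0, use_bigrams=True).fit(train_docs, train_labels)
print(model.predict(["striker scores cup final", "central bank raises rates"]))
# → ['Sports', 'Business']
```

Fitting a second model with `use_bigrams=False` on the same split and comparing the two `accuracy` scores on held-out documents is one simple way to measure whether a feature-engineering step helps. The CNN and RCNN models mentioned above would require a deep-learning framework and are not sketched here.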
