Machine Translation Weekly 48: MARGE

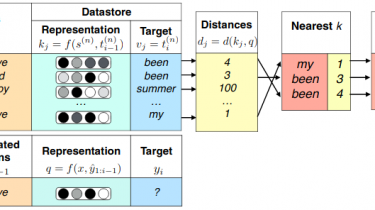

This week, I will comment on a recent pre-print by Facebook AI titled Pre-training via Paraphrasing. The paper introduces a model called MARGE (the name signals that it belongs to the same family as Facebook's BART) that uses a clever form of denoising as the training objective for learning representations. Most currently used pre-trained models are based on some form of denoising: we sample some noise, apply it to the input, and want the model to get rid of it […]
