Machine Translation of Novels in the Age of Transformer

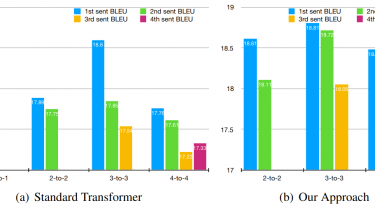

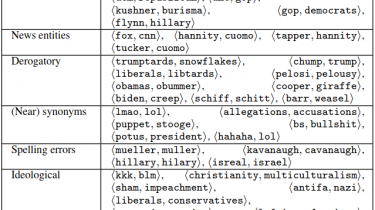

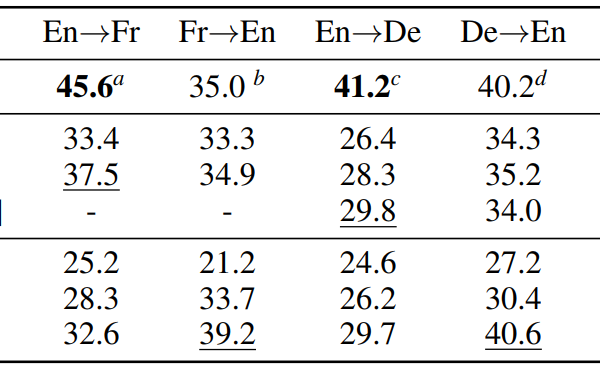

In this chapter we build a machine translation (MT) system tailored to the literary domain, specifically to novels, based on the state-of-the-art architecture in neural MT (NMT), the Transformer (Vaswani et al., 2017), for the translation direction English-to-Catalan. Subsequently, we assess to what extent such a system can be useful by evaluating its translations, by comparing this MT system against three other systems (two domain-specific systems under the recurrent and phrase-based paradigms and a popular generic on-line system) on three […]

Read more