Issue #116 – Fully Non-autoregressive Neural Machine Translation

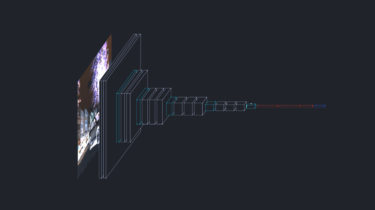

04 Feb 2021

Author: Dr. Patrik Lambert, Senior Machine Translation Scientist @ Iconic

Introduction

The standard Transformer model is autoregressive (AT), which means that the prediction of each target word is based on the predictions for the previous words. The output is generated from left to right, a process which cannot be parallelised because the prediction probability of a token depends on previous tokens. In the last few years, new approaches have been […]
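To make the left-to-right dependency concrete, here is a minimal Python sketch of greedy autoregressive decoding. It is an illustration only, not the method from the article: `toy_next_token` is a hypothetical stand-in for a trained model (it simply upper-cases the source, one token per step), and the names `greedy_decode`, `BOS` and `EOS` are assumptions made for this example. The point is the loop structure: each call needs the prefix produced by the previous calls, so the steps cannot run in parallel.

```python
from typing import Callable, List, Sequence

BOS, EOS = "<s>", "</s>"  # hypothetical begin/end-of-sentence markers


def toy_next_token(source: Sequence[str], prefix: Sequence[str]) -> str:
    """Hypothetical stand-in for a trained model.

    Predicts the next target token from the source and the target prefix
    generated so far. Here it just upper-cases the source token by token.
    """
    position = len(prefix) - 1  # the prefix always starts with BOS
    if position < len(source):
        return source[position].upper()
    return EOS


def greedy_decode(
    source: Sequence[str],
    next_token: Callable[[Sequence[str], Sequence[str]], str] = toy_next_token,
    max_len: int = 50,
) -> List[str]:
    """Generate the target left to right, one token per step."""
    prefix = [BOS]
    for _ in range(max_len):
        # Step t depends on every token produced in steps 1..t-1.
        token = next_token(source, prefix)
        if token == EOS:
            break
        prefix.append(token)
    return prefix[1:]  # drop the BOS marker


if __name__ == "__main__":
    print(greedy_decode(["hello", "world"]))
```

A target of n tokens therefore costs n sequential model calls (plus one for the end marker), which is the latency that non-autoregressive approaches aim to reduce.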
