Cd2CR: Co-reference Resolution Across Documents and Domains

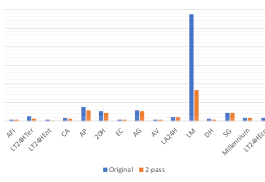

| Test Type | Co-referent? | Pass Rate & Total Tests | Example test case and outcome |
|---|---|---|---|
| Anaphora and Exophora resolution | Yes | 47.1% (16/34) | M1: …to boost the struggling insect’s numbers… [PASS] M2: the annual migration of the monarch butterfly… |
| … | No | … | … |
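The "Pass Rate & Total Tests" column reports passed tests over total tests, so 16 passes out of 34 tests gives 16/34 ≈ 47.1%. Below is a minimal sketch of how such a pass rate could be tallied. It assumes each test is a mention pair (M1, M2) with a gold "Co-referent?" label and that the model under test exposes a boolean `predict(m1, m2)` function. `PairTest`, `pass_rate` and `format_rate` are hypothetical names for illustration only, not part of any released Cd2CR code.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class PairTest:
    """Hypothetical behavioural test: two mentions plus the gold label."""
    mention_1: str
    mention_2: str
    coreferent: bool  # expected answer, as in the "Co-referent?" column


def pass_rate(tests: Sequence[PairTest],
              predict: Callable[[str, str], bool]) -> tuple[int, int, float]:
    """Return (passed, total, percentage) for an assumed predict(m1, m2) function.

    A test passes when the model's co-reference decision matches the gold label.
    """
    passed = sum(1 for t in tests if predict(t.mention_1, t.mention_2) == t.coreferent)
    total = len(tests)
    pct = 100.0 * passed / total if total else 0.0
    return passed, total, pct


def format_rate(passed: int, total: int) -> str:
    """Format a result the way the table does, e.g. '47.1% (16/34)'."""
    return f"{100.0 * passed / total:.1f}% ({passed}/{total})"


if __name__ == "__main__":
    # Reproduces the formatting of the anaphora/exophora row from its counts.
    print(format_rate(16, 34))  # 47.1% (16/34)
```

Keeping the gold label on each test lets the same harness score both positive ("Yes") and negative ("No") co-reference tests.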
