Category: NLP

Articles About Natural Language Processing

A Gentle Introduction to Deep Neural Networks with Python

This is a guest post from Andrew Ferlitsch, author of Deep Learning Patterns and Practices. It provides an introduction to deep neural networks in Python. Andrew is an expert on computer vision, deep learning, and operationalizing ML in production at Google Cloud AI Developer Relations. This article examines the parts that make up neural networks and deep neural networks, as well as the fundamentally different types of models (e.g. regression), their constituent parts (and how they […]

Will AI Kill Your Job?

Oh, what will happen to our jobs when my company starts using AI? This is a popular question that comes up when I give talks, even to a pseudo-technical audience. Not surprisingly, this question will land sarcastic smiles on the faces of some data scientists and AI experts; you may even catch some eyes rolling. But it’s a valid question. Don’t forget, we had a US political candidate run for the presidency on the theme that automation is robbing […]

ML and NLP Research Highlights of 2021

Credit for the title image: Liu et al. (2021)

2021 saw many exciting advances in machine learning (ML) and natural language processing (NLP). In this post, I will cover the papers and research areas that I found most inspiring. I tried to cover the papers that I was aware of but likely missed many relevant ones. Feel free to highlight them as well as ones that you found inspiring in the comments. I discuss the following highlights: Universal Models; Massive […]

The NLP Cypher | 01.23.22

The UnifiedSKG framework unifies 21 SKG tasks into the text-to-text format, aiming to promote systematic SKG research instead of being exclusive to a single task, domain, or dataset. It shows that large language models like T5, with simple modifications when necessary, achieve state-of-the-art performance on nearly all 21 tasks.

The NLP Cypher | 12.26.21

Merry Christmas 🎄 to those celebrating. And Happy New Year! Even OpenAI is feeling the holiday spirit: they open sourced their photorealistic GLIDE model several days ago. The release includes three notebooks: The text2im notebook shows how to use GLIDE (filtered) with classifier-free guidance to produce images conditioned on text prompts. The inpaint notebook shows how to use GLIDE (filtered) to fill in a masked region of an image, conditioned on a text prompt. The clip_guided notebook shows how to use GLIDE […]

The NLP Cypher | 12.12.21

Here’s a collection of papers by your favorite big tech and educational institutions. “The Generalist Language Model (GLaM), a trillion weight model that can be trained and served efficiently (in terms of computation and energy use) thanks to sparsity, and achieves competitive performance on multiple few-shot learning tasks. GLaM’s performance compares favorably to a dense language model, GPT-3 (175B) with significantly improved learning efficiency across 29 public NLP benchmarks in seven categories, spanning language completion, open-domain question answering, and natural […]

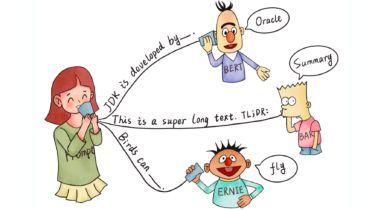

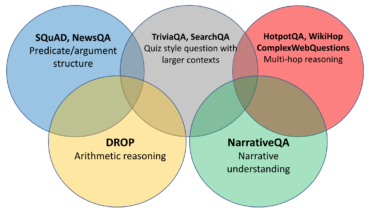

Multi-domain Multilingual Question Answering

This post expands on the EMNLP 2021 tutorial on Multi-domain Multilingual Question Answering. The tutorial was organised by Avi Sil and me. In this post, I highlight key insights and takeaways of the tutorial. The slides are available online. You can find the table of contents below: Introduction; Open-Retrieval QA vs Reading Comprehension; What is a Domain?; Multi-Domain QA; Datasets for Multi-Domain QA; Multi-Domain QA Models; Multilingual QA; Datasets for Multilingual QA; Multilingual QA Models; Open Research Directions. Question answering […]

Interpretable agent communication from scratch (with a generic visual processor emerging on the side)

Abstract: As deep networks begin to be deployed as autonomous agents, the issue of how they can communicate with each other becomes important. Here, we train two deep nets from scratch to perform large-scale referent identification through unsupervised emergent communication. We show that the partially interpretable emergent protocol allows the nets to successfully communicate even about object classes they did not see at training time. The visual representations induced as a by-product of our training regime, moreover, when re-used as […]

Pay Better Attention to Attention: Head Selection in Multilingual and Multi-Domain Sequence Modeling

Abstract: Multi-head attention has each of the attention heads collect salient information from different parts of an input sequence, making it a powerful mechanism for sequence modeling. Multilingual and multi-domain learning are common scenarios for sequence modeling, where the key challenge is to maximize positive transfer and mitigate negative interference across languages and domains. In this paper, we find that non-selective attention sharing is sub-optimal for achieving good generalization across all languages and domains. We further propose attention sharing strategies […]
