Machine Translation Weekly 54: Nearest Neighbor MT

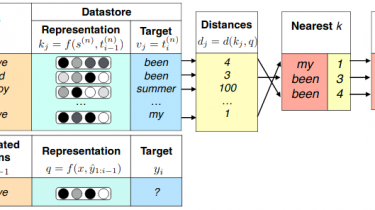

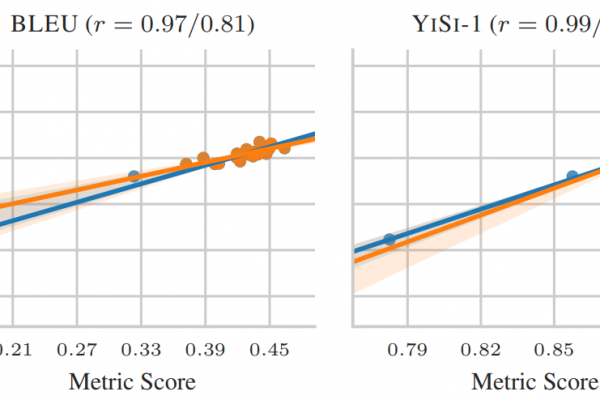

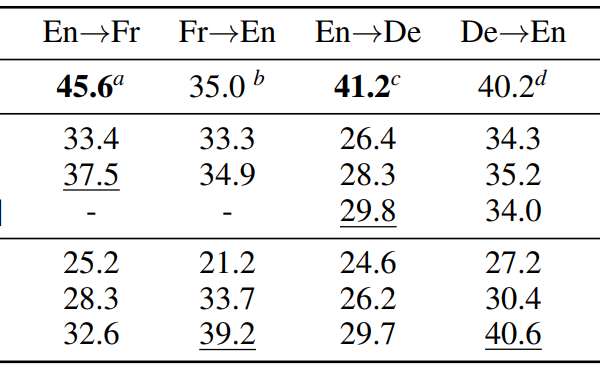

This week, I will discuss Nearest Neighbor Machine Translation, a paper from this year’s ICML that takes advantage of the overlooked representation learning capabilities of machine translation models. The paper’s idea is pretty simple and is basically the same as in the previous work on nearest neighbor language models. The paper implicitly argues (or at least I think it does) that the final softmax layer of MT models is too simplifying and thus poses a sort of information bottleneck, even […]
