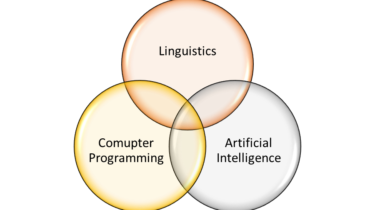

NLP Landscape from the 1960s to 2022: An Introduction to NLP and Its History

Hello, and welcome, everyone! Today I will give a brief introduction to a very interesting and trending topic: Natural Language Processing (NLP). Let's understand NLP: what it is and some of its real-world applications.
