Dataset for eye-tracking tasks

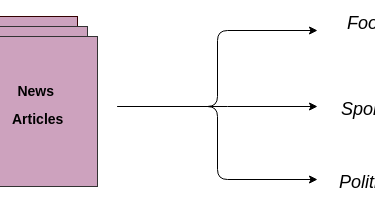

In recent years, many different deep neural networks have been developed, but because deep networks have a large number of layers, training them requires a long time and large datasets. Today it is popular to use pretrained deep neural networks for various tasks, even simple ones for which such deep networks are not required… Well-known deep networks such as YOLOv3, SSD, etc. are intended for tracking and monitoring various objects, so their weights are heavy and […]
