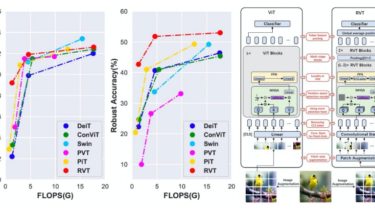

Rethinking the Design Principles of Robust Vision Transformer

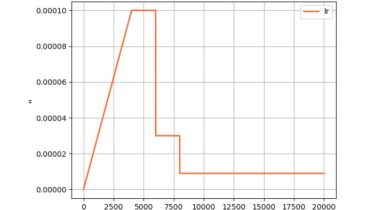

Robust-Vision-Transformer

Note: Since the model was trained on our private platform, this transferred code has not been tested and may contain some bugs. If you encounter any problems, feel free to open an issue!

This repository contains PyTorch code for Robust Vision Transformers. For details, see our paper “Rethinking the Design Principles of Robust Vision Transformer”.

First, clone the repository locally:

`git clone https://github.com/vtddggg/Robust-Vision-Transformer.git`

Install PyTorch 1.7.0+, torchvision 0.8.1+, and pytorch-image-models 0.3.2:

`conda install -c pytorch pytorch torchvision`
pip […]
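The version requirements above can be checked before running any training script. The sketch below is not part of the repository. It assumes the PyPI distribution names `torch`, `torchvision` and `timm` (pytorch-image-models is published on PyPI as `timm`), treats "1.7.0+" and "0.8.1+" as minimums and "0.3.2" as an exact pin, and compares only the numeric major.minor.patch parts. The helper names `parse_version`, `satisfies` and `check_environment` are hypothetical.

```python
"""Sketch (not from the repository): check installed versions against the README's stated requirements.

Assumption: distribution names are "torch", "torchvision" and "timm"
(pytorch-image-models is published on PyPI as "timm").
"""
from importlib import metadata
import re

# Requirements as read from the install note; timm is assumed to be an exact pin.
REQUIREMENTS = {
    "torch": (">=", "1.7.0"),
    "torchvision": (">=", "0.8.1"),
    "timm": ("==", "0.3.2"),
}


def parse_version(text):
    """Turn '1.7.1+cu110' into (1, 7, 1); local and pre-release suffixes are ignored."""
    core = text.split("+")[0]
    parts = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    while len(parts) < 3:  # pad "2.0" to (2, 0, 0)
        parts.append(0)
    return tuple(parts)


def satisfies(installed, op, required):
    """Compare two version strings with '>=' or '=='."""
    a, b = parse_version(installed), parse_version(required)
    if op == ">=":
        return a >= b
    if op == "==":
        return a == b
    raise ValueError(f"unsupported operator: {op}")


def check_environment(requirements=REQUIREMENTS):
    """Return {package: (installed_version_or_None, ok)} for each requirement."""
    report = {}
    for name, (op, required) in requirements.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = (None, False)  # package not installed at all
            continue
        report[name] = (installed, satisfies(installed, op, required))
    return report


if __name__ == "__main__":
    for name, (version, ok) in check_environment().items():
        status = "ok" if ok else "MISSING" if version is None else "mismatch"
        print(f"{name:12s} {version or '-':12s} {status}")
```

Versions are compared as integer tuples rather than raw strings so that, for example, 1.10.0 is correctly treated as newer than 1.7.0.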
