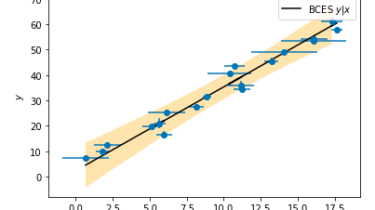

Linear regression for data with measurement errors and intrinsic scatter

BCES: a Python module for performing robust linear regression on (X, Y) data points where both X and Y have measurement errors. The fitting method is bivariate correlated errors and intrinsic scatter (BCES) regression, following the description given in Akritas & Bershady 1996, ApJ. Some of the advantages of BCES regression compared to ordinary least squares fitting (quoted from Akritas & Bershady 1996):

- it allows for measurement errors on both variables
- it permits the measurement errors for the two variables to […]
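As a rough illustration of what the method computes, here is a minimal sketch of the BCES point estimates for the Y|X, X|Y and bisector slopes. It assumes the moment-based estimators described by Akritas & Bershady (1996): the centered sample moments are corrected by subtracting the summed measurement-error variances of X and Y and the summed per-point covariance between the X and Y errors. The function `bces_slopes` and its argument names are hypothetical and are not this module's API; the sketch also omits the slope and intercept uncertainty estimates and the orthogonal estimator.

```python
import math


def bces_slopes(x, xerr, y, yerr, cov=None):
    """Hypothetical helper: BCES point estimates (slope, intercept).

    x, y       -- measured values
    xerr, yerr -- 1-sigma measurement errors on x and y
    cov        -- per-point covariance between the x and y errors
                  (assumed zero when not given)
    """
    n = len(x)
    if cov is None:
        cov = [0.0] * n
    xm = sum(x) / n
    ym = sum(y) / n

    # Centered sums of squares and cross-products of the data.
    sxx = sum((xi - xm) ** 2 for xi in x)
    syy = sum((yi - ym) ** 2 for yi in y)
    sxy = sum((xi - xm) * (yi - ym) for xi, yi in zip(x, y))

    # Summed measurement-error variances and error covariance,
    # subtracted to remove the bias they introduce into the moments.
    v11 = sum(e ** 2 for e in xerr)
    v22 = sum(e ** 2 for e in yerr)
    v12 = sum(cov)

    # BCES(Y|X): corrected covariance over corrected variance of x.
    b_yx = (sxy - v12) / (sxx - v11)
    # BCES(X|Y): corrected variance of y over corrected covariance.
    b_xy = (syy - v22) / (sxy - v12)
    # Bisector of the Y|X and X|Y lines.
    b_bis = (b_yx * b_xy - 1.0
             + math.sqrt((1.0 + b_yx ** 2) * (1.0 + b_xy ** 2))) / (b_yx + b_xy)

    slopes = {"y|x": b_yx, "x|y": b_xy, "bisector": b_bis}
    # Each line passes through the sample means: intercept = ym - b * xm.
    return {name: (b, ym - b * xm) for name, b in slopes.items()}


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    y = [2.0, 4.0, 6.0, 8.0]
    result = bces_slopes(x, [0.5] * 4, y, [0.0] * 4)
    for name, (slope, intercept) in result.items():
        print(f"{name}: slope={slope:.4f}, intercept={intercept:.4f}")
```

With zero measurement errors the Y|X estimate reduces to the ordinary least squares slope; nonzero X errors shrink the denominator and steepen the Y|X slope, correcting the attenuation that OLS would show. Note that `sxx - v11` can approach zero or turn negative when the measurement errors dominate the observed scatter, in which case the estimate becomes unstable.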
