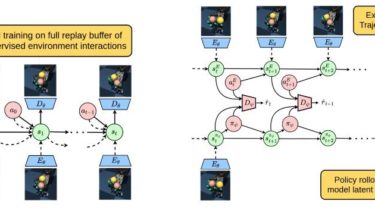

Visual Adversarial Imitation Learning using Variational Models (VMAIL)

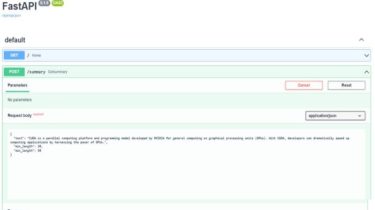

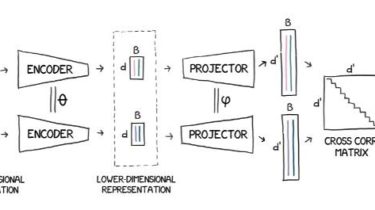

This is the official implementation of the NeurIPS 2021 paper.

Method

VMAIL simultaneously learns a variational dynamics model and trains an on-policy adversarial imitation learning algorithm in the latent space using only model-based rollouts. This allows for stable and sample-efficient training, as well as zero-shot imitation learning by transferring the learned dynamics model.

Instructions

Get dependencies by running, in order:

1. `conda env create -f vmail.yml`
2. `conda activate vmail`
3. `cd robel_claw/robel`
4. `pip install -e .`

To train agents for each environment, download the expert data from the […]
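The method paragraph describes a loop in which a discriminator scores latent state-action pairs and the policy is improved using only imagined rollouts through the learned model. The pure-Python sketch below illustrates that loop under heavy, stated assumptions: the learned variational dynamics model is replaced by a fixed linear map (`latent_step`), the discriminator is a logistic regression, the imitation reward is taken as log D - log(1 - D), and "policy improvement" is reduced to picking the best constant action from a candidate list by imagined return. Every function name here is hypothetical and does not come from the VMAIL codebase.

```python
import math

# Hypothetical toy setting: 2-D latent state z and a scalar action a.
# In VMAIL the latent states come from a learned variational dynamics model;
# here a fixed linear map stands in for that model (assumption).


def latent_step(z, a):
    """Stand-in for a learned latent transition z' = f(z, a)."""
    return [0.9 * z[0] + 0.1 * a, 0.9 * z[1] - 0.1 * a]


def rollout(z0, policy, horizon):
    """Imagined (model-based) rollout: returns a list of (z, a) pairs."""
    traj, z = [], z0
    for _ in range(horizon):
        a = policy(z)
        traj.append((z, a))
        z = latent_step(z, a)
    return traj


def features(z, a):
    """Bias term followed by the raw latent state and action."""
    return [1.0, z[0], z[1], a]


def logit(w, z, a):
    return sum(wi * xi for wi, xi in zip(w, features(z, a)))


def disc_prob(w, z, a):
    """D(z, a): estimated probability that (z, a) came from the expert."""
    return 1.0 / (1.0 + math.exp(-logit(w, z, a)))


def train_discriminator(expert, agent, steps=500, lr=0.1):
    """Logistic regression by gradient ascent: expert pairs -> 1, agent pairs -> 0."""
    w = [0.0] * 4
    data = [(pair, 1.0) for pair in expert] + [(pair, 0.0) for pair in agent]
    for _ in range(steps):
        grad = [0.0] * 4
        for (z, a), label in data:
            err = label - disc_prob(w, z, a)
            for i, xi in enumerate(features(z, a)):
                grad[i] += err * xi
        w = [wi + lr * gi / len(data) for wi, gi in zip(w, grad)]
    return w


def imitation_reward(w, z, a):
    """Reward log D - log(1 - D), which simplifies to the discriminator logit."""
    return logit(w, z, a)


def improve_policy(w, z0, candidates, horizon):
    """Toy policy step: pick the constant action with the highest imagined return."""
    def imagined_return(theta):
        traj = rollout(z0, lambda z: theta, horizon)
        return sum(imitation_reward(w, z, a) for z, a in traj)
    return max(candidates, key=imagined_return)


if __name__ == "__main__":
    z0 = [1.0, 1.0]
    expert = rollout(z0, lambda z: 1.0, 10)   # stand-in for encoded expert demos
    agent = rollout(z0, lambda z: -1.0, 10)   # imagined rollout of the current policy
    w = train_discriminator(expert, agent)
    print(improve_policy(w, z0, [-1.0, 0.0, 1.0], 10))  # → 1.0
```

Running the script prints `1.0`: the discriminator learns to prefer the expert's action, so the imagined return is highest for the candidate that matches it. The reward log D - log(1 - D) is one common adversarial-imitation choice (the GAIL-style -log(1 - D) is another); this sketch does not claim which one VMAIL uses, and a practical version would replace the linear model, the logistic discriminator, and the candidate search with learned networks and gradient-based policy optimization.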
