Develop k-Nearest Neighbors in Python From Scratch

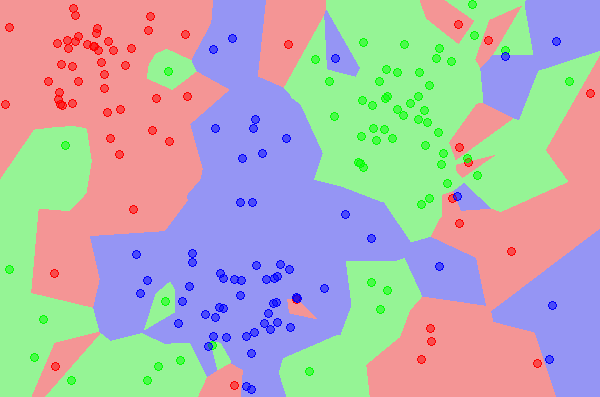

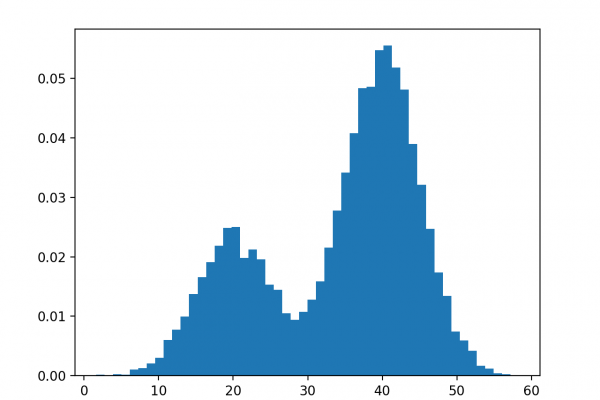

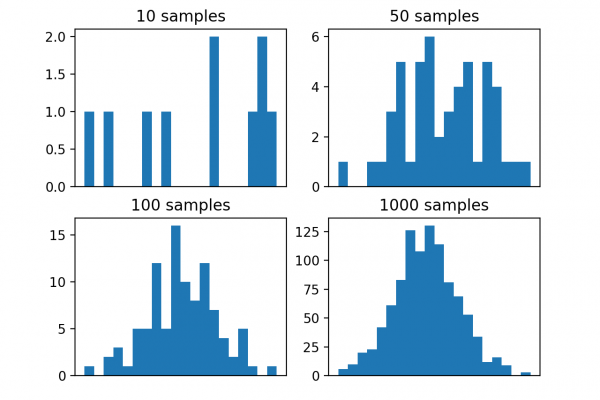

Last Updated on February 24, 2020

In this tutorial, you are going to learn about the k-Nearest Neighbors algorithm, including how it works and how to implement it from scratch in Python (without libraries).

A simple but powerful approach for making predictions is to use the most similar historical examples to the new data. This is the principle behind the k-Nearest Neighbors algorithm.

After completing this tutorial, you will know:

- How to code the k-Nearest Neighbors algorithm step-by-step.
- How to evaluate […]
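
The principle above (predict from the most similar historical examples) can be illustrated in a few lines of plain Python. This is a minimal sketch under stated assumptions, not the tutorial's own code. It assumes numeric features stored in list rows with the class label in the last column, Euclidean distance as the similarity measure, and a majority vote among the k closest rows. The function names `euclidean_distance`, `get_neighbors` and `predict_classification`, along with the toy `dataset`, are hypothetical and chosen only for illustration.

```python
from collections import Counter
from math import sqrt


def euclidean_distance(a, b):
    # Straight-line distance between two equal-length feature vectors.
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def get_neighbors(train, query, k):
    # Assumption: each training row is [feature_1, ..., feature_n, label],
    # while the query holds features only. Sort rows by distance to the
    # query (label excluded) and keep the k closest.
    ranked = sorted(train, key=lambda row: euclidean_distance(row[:-1], query))
    return ranked[:k]


def predict_classification(train, query, k):
    # Majority vote over the labels of the k nearest rows. Labels are
    # listed nearest-first, so a tied vote goes to the label whose
    # closest member is nearest to the query.
    labels = [row[-1] for row in get_neighbors(train, query, k)]
    return Counter(labels).most_common(1)[0][0]


# Hypothetical toy data: two numeric features and a class label (0 or 1).
dataset = [
    [2.78, 2.55, 0],
    [1.47, 2.36, 0],
    [3.40, 4.40, 0],
    [7.63, 2.76, 1],
    [5.33, 2.09, 1],
    [6.92, 1.77, 1],
]

print(predict_classification(dataset, [3.0, 3.0], k=3))  # prints 0
print(predict_classification(dataset, [7.0, 2.0], k=3))  # prints 1
```

There is no training step here. The "model" is simply the stored dataset, and all the work happens at prediction time, when every stored row is compared against the new example. That keeps the sketch short, but prediction cost grows linearly with the size of the training data.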